Enhancing User Interaction With First Person User Interface

But sometimes it makes sense to think of the real world as an interface: to design user interactions that make use of how people actually see the world, and to take advantage of first person user interfaces.

First person user interfaces can be a good fit for applications that allow people to navigate the real world, “augment” their immediate surroundings with relevant information, and interact with objects or people directly around them.

Navigating the Real World

The widespread integration of location detection technologies (like GPS and cell tower triangulation) has made mobile applications that know where you are commonplace. Location-aware applications can help you find nearby friends or discover someplace good to eat by pinpointing you on a map.

When coupled with a digital compass (or a similar technology) that can detect your orientation, things get even more interesting. With access to location and orientation, software applications know not only where you are but also which way you are facing.
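To make the combination of location and orientation concrete, here is a minimal sketch of how an application might work out whether a place is ahead of you, to your left, or to your right. The function names are hypothetical, the heading is assumed to come from the compass in degrees clockwise from north, and the bearing uses the standard great-circle formula.

```python
import math

def initial_bearing(lat1, lon1, lat2, lon2):
    """Compass bearing in degrees (0 = north, 90 = east) from point 1 to point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0

def relative_angle(heading, bearing):
    """Signed angle from the device heading to the target, in [-180, 180).

    Negative means the target is to the left, positive to the right.
    """
    return (bearing - heading + 180.0) % 360.0 - 180.0

# Hypothetical example: you face 350 degrees and the target bears 10 degrees,
# so it sits slightly to your right.
angle = relative_angle(350.0, 10.0)
```

Location alone would tell the application how far away the place is; only the heading lets it say which way to turn.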

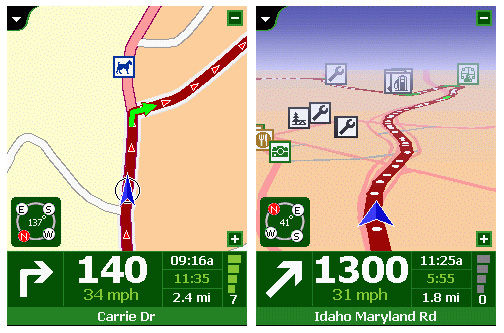

This may seem like a small detail but it opens up a set of new interface possibilities that are designed from your current perspective. Consider the difference between the two screens from the TomTom navigation system shown below. The screen on the left provides a two-dimensional, overhead view of a driver’s current position and route. The screen on the right provides the same information but from a first person perspective.

This first person user interface mirrors your perspective of the world, which hopefully allows you to more easily follow a route. When people are in motion, first person interfaces can help them orient quickly and stay on track without having to translate two-dimensional information to the real world.

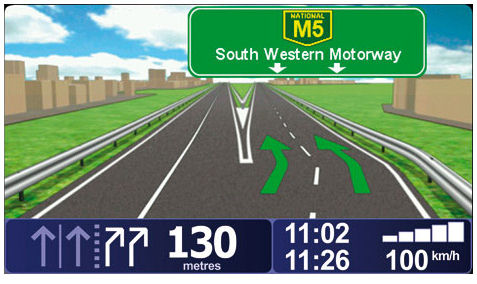

TomTom’s latest software version goes even further toward mirroring our perspective of the world by using colors and graphics that more accurately match real surroundings. But why re-draw the world when you can provide navigation information directly on it?

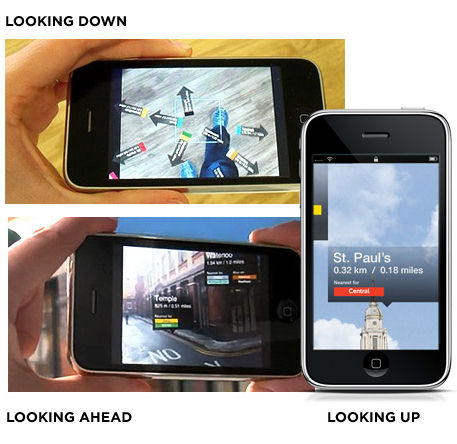

Nearest Tube is a first person navigation application that tells you where the closest subway station is by displaying navigation markers on the real world as seen through your phone’s camera.

As you can see in the video above, the application places pointers to each subway station in your field of vision so you can quickly determine which direction to go. It’s important to note, however, that the application actually provides different information depending on your orientation.

When you hold the phone flat and look down, a set of arrows directs you to each subway line. Holding the phone in front of you shows the nearest subway stations and how far away they are. Tilting the phone upwards shows stations further away from you.
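The orientation-dependent behavior described here can be sketched as a simple mapping from the phone's tilt to a view. This is not how Nearest Tube is actually implemented; the pitch convention (0 degrees for a phone lying flat, 90 for a phone held upright in front of you) and the threshold values are assumptions chosen for illustration.

```python
# Hypothetical thresholds, in degrees of pitch.
FLAT_LIMIT = 30.0      # below this, treat the phone as held flat
UPRIGHT_LIMIT = 100.0  # above this, treat the phone as tilted upwards

def view_mode(pitch_degrees):
    """Pick which information to show based on how the phone is held."""
    if pitch_degrees < FLAT_LIMIT:
        return "line_arrows"        # looking down: arrows toward each subway line
    if pitch_degrees < UPRIGHT_LIMIT:
        return "nearby_stations"    # looking ahead: nearest stations and distances
    return "distant_stations"       # looking up: stations further away
```

A real application would probably add some hysteresis around each threshold so the view does not flicker when the phone hovers near a boundary.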

Making use of the multiple perspectives naturally available to you (looking down, looking ahead, looking up) is an example of how first person interfaces allow us to interact with software in a way that is guessable, physical, and realistic. Another approach (used in Google Street View) is to turn real world elements into interface elements.

Street View enables people to navigate the world using actual photographs of many major towns and cities. Previously, moving through these images was only possible by clicking on forward and back arrows overlaid on top of the photos. Now (as you can see in the demo video below), Street View allows you to use the real-world images themselves to navigate around. Just place the cursor on the actual building or point on the map that you want to view and double-click.

Augmented Reality

Not only can first person user interfaces help us move through the world, they can also help us understand it. The information that applications like Nearest Tube overlay on the world can be thought of as “augmenting” our view of reality. Augmented reality applications are a popular form of first person interfaces that enhance the real world with information not visible to the naked eye. These applications present user interface elements on top of images of the real world using a camera or heads-up display.

For example, an application could augment the real world with information such as ratings and reviews or images of food for restaurants in our field of vision. In fact, lots of different kinds of information can be presented from a first person perspective in a way that enhances reality.
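As a rough illustration of what “in our field of vision” means to software, the sketch below decides where a marker for a place should appear horizontally on screen. It assumes the application already knows your compass heading and the bearing to the place; the 60 degree field of view, the 320 pixel screen width, and the linear mapping are all simplifying assumptions rather than properties of any real camera.

```python
def screen_x(heading, bearing, fov_degrees=60.0, screen_width=320):
    """Horizontal pixel position for a marker, or None if it is out of view.

    heading and bearing are compass angles in degrees clockwise from north.
    """
    # Signed angle from the center of the view to the target, in [-180, 180).
    offset = (bearing - heading + 180.0) % 360.0 - 180.0
    half_fov = fov_degrees / 2.0
    if abs(offset) > half_fov:
        return None  # the place is outside the camera's field of view
    # Map [-half_fov, +half_fov] linearly onto [0, screen_width].
    return round((offset + half_fov) / fov_degrees * screen_width)
```

A place straight ahead lands in the middle of the screen, and a place behind you gets no marker at all, which is what keeps the overlay tied to your actual perspective.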

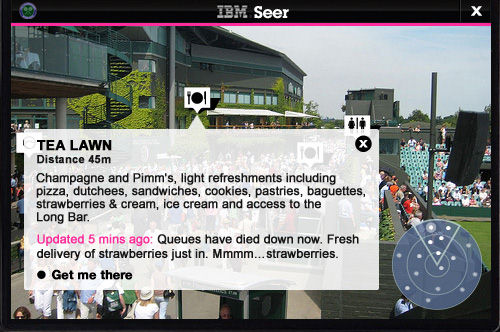

IBM’s Seer application provides a way to navigate this year’s Wimbledon tennis tournament more effectively. Not only does the application include navigation tools but it also augments your field of vision with useful information like the waiting times at taxi and concession stands.

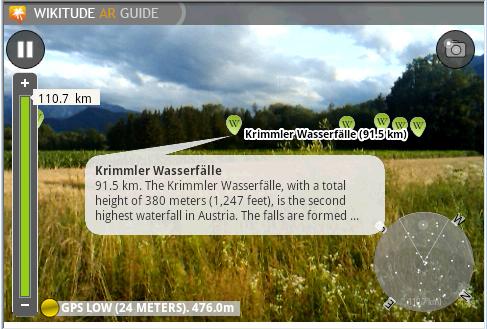

Wikitude is an application that displays landmarks, points of interest, and historic information wherever you point your phone’s camera. This not only provides rich context about your surroundings, it also helps you discover new places and history.

These augmented reality applications share a number of design features. Both IBM Seer and Wikitude include a small indicator (in the bottom right corner of the screen) that lets you know what direction you are facing and how many points of interest are located near you. This overview gives you a quick sense of what information is available. Ideally, the data in this overview can be manipulated to zoom further out or closer in, adjust search filters, and even select specific elements.

Wikitude allows you to manage the size of this overview radius through a zoom in/out control on the left side of the screen. This allows you to focus on points of interest near you or look further out. Since it is dealing with a much smaller area (the Wimbledon grounds), IBM Seer doesn’t include (or need) this feature.
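One way to think about this zoom control is as a radius filter over the known points of interest. The sketch below is an assumption about how such a filter could work, not a description of Wikitude's code: it uses the haversine formula for distance, and the coordinates and names are made up.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, a common approximation

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def points_in_radius(user, points, radius_m):
    """Names of the points within radius_m of the user, nearest first."""
    lat, lon = user
    hits = []
    for name, p_lat, p_lon in points:
        distance = haversine_m(lat, lon, p_lat, p_lon)
        if distance <= radius_m:
            hits.append((distance, name))
    return [name for _, name in sorted(hits)]

# Hypothetical data: zooming out simply means passing a larger radius.
places = [("near", 51.5, -0.1), ("mid", 51.509, -0.1), ("far", 51.6, -0.1)]
close_by = points_in_radius((51.5, -0.1), places, 500)
```

The count shown in the overview indicator would then be the length of the returned list for the current radius.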

In both applications, the primary method for selecting information of interest is by clicking on the icons overlaid on the camera’s viewport. In the case of IBM Seer, different icons indicate different kinds of information like concessions or restrooms. In Wikitude, all the icons are the same and indicate information of interest and distance from you. Selecting any of these icons brings up a preview of the information. In most augmented reality applications, a further information window or screen is necessary to access more details than the camera view can display.

When many different types of information can be used to augment reality within a single application, it’s a good idea to allow people to select what kinds of information they want visible. Otherwise, too many information points can quickly overwhelm the interface.

Layar is an augmented reality application that allows you to select what kinds of information should be displayed within your field of vision at any time. The application currently allows you to see houses for sale and rent, local business information, available jobs, ATM locations, health care providers, and more. As the video below highlights, you can switch between layers that display these information points by clicking on the arrows on the right and left sides of the screen.

Layar also provides quick access to search filters that allow you to change the criteria for what shows up on screen. This helps narrow down what is showing up in front of you.
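The combination of layers and search filters can be sketched as two successive narrowing steps. The data model below is hypothetical (Layar's real one is not described here), with a distance limit standing in for whatever criteria a filter might apply.

```python
from dataclasses import dataclass

@dataclass
class InfoPoint:
    name: str
    layer: str         # e.g. "housing", "atm", "jobs" (hypothetical layer names)
    distance_m: float  # distance from the viewer in meters

def visible_points(points, active_layer, max_distance_m=None):
    """Keep only points on the active layer, optionally within a distance limit."""
    shown = [p for p in points if p.layer == active_layer]
    if max_distance_m is not None:
        shown = [p for p in shown if p.distance_m <= max_distance_m]
    return shown

# Hypothetical usage: switch to the "atm" layer and narrow to 300 meters.
points = [
    InfoPoint("Flat for rent", "housing", 120.0),
    InfoPoint("Corner ATM", "atm", 80.0),
    InfoPoint("Station ATM", "atm", 650.0),
]
nearby_atms = visible_points(points, "atm", max_distance_m=300.0)
```

Showing one layer at a time is the design choice that keeps the camera view from filling up with unrelated markers.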

Interacting with Things Near You

First person user interfaces aren’t limited to helping you navigate or better understand the physical space around you; they can also enable you to interact directly with the people and objects residing within that space. In most cases, the prerequisite for these kinds of interactions is identifying what (or who) is near you. As a result, most of the early applications in this category are focused on getting that part of things right first.

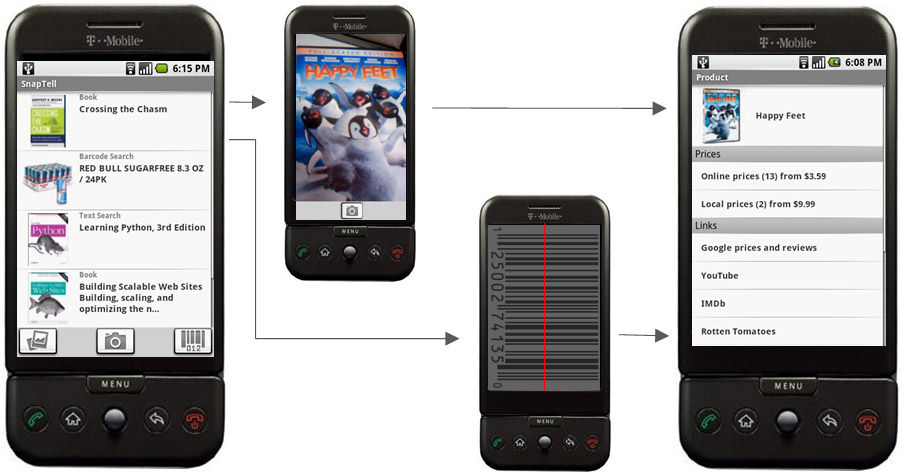

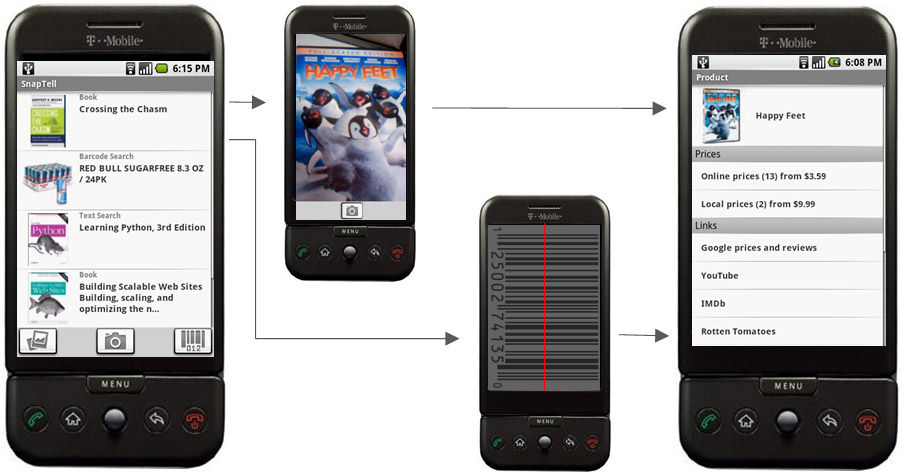

One way to identify objects near you is to explicitly provide information about them to an application. An application like SnapTell can identify popular products like DVDs, CDs, video games, and books when you take a picture of the product or its barcode. The application can then return prices, reviews, and more to you.

This approach might eventually also be used to identify people as illustrated in the augmented ID concept application from TAT in the video below. This proposed application uses facial recognition to identify people near you and provides access to digital information about them like their social networking profiles and updates.

While taking pictures of objects or people to get information about them is a more direct interaction with the real world than typing a keyword into a search engine, it only partially takes advantage of first person perspective. Perhaps it’s better to use the objects themselves as the interface.

For example, if any of the objects near you can transmit information using technologies like RFID tags, an application can simply listen to how these objects identify themselves and act accordingly. Compare the process of inputting a barcode or picture of an object to the one illustrated in this video from the Touch research project. Simply move your device near an RFID tagged object and the application can provide the right information or actions for that object to you.
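The idea of objects identifying themselves can be sketched as a lookup from a tag identifier to an action. Everything here is hypothetical: the tag IDs, the action names, and the assumption that some reader hardware hands the application a plain string when a tag comes into range.

```python
# Hypothetical registry mapping tag identifiers to (action, detail) pairs.
TAG_ACTIONS = {
    "tag:poster-001": ("show_info", "Concert details and ticket link"),
    "tag:busstop-17": ("show_timetable", "Departures for stop 17"),
}

def handle_tag(tag_id, registry=TAG_ACTIONS):
    """Return the action for a tag that has come into range.

    Unknown tags are ignored rather than treated as errors, since the
    device may pass many tagged objects it knows nothing about.
    """
    return registry.get(tag_id, ("ignore", None))

action, detail = handle_tag("tag:busstop-17")
```

Compared with photographing a barcode, the user's only input is moving the device near the object; the rest is a lookup.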

This form of first person interface enables physical and realistic interactions with objects. Taking this idea even further, information can be displayed on the objects themselves instead of on a device. The 6th Sense project from the MIT Media Lab does just that by using a wearable projector to display product information on the actual products you find in a library or store.

Though some of these first person interfaces are forward-looking, most are available now and ready to help people navigate the real world, “augment” their immediate surroundings, and interact with objects or people directly around them. What’s going to come next is likely to be even more exciting.

The next time you are working on a software application, consider whether a first person user interface could provide a more natural experience for your users by bringing the real world and virtual world closer together.
