Front-End Performance 2021: Assets Optimizations


Table Of Contents

- Getting Ready: Planning And Metrics

- Setting Realistic Goals

- Defining The Environment

- Assets Optimizations

- Build Optimizations

- Delivery Optimizations

- Networking, HTTP/2, HTTP/3

- Testing And Monitoring

- Quick Wins

- Everything on one page


Assets Optimizations

- Use Brotli for plain text compression.

In 2015, Google introduced Brotli, a new open-source lossless data format, which is now supported in all modern browsers. The open-source Brotli library, which implements an encoder and decoder for Brotli, has 11 predefined quality levels for the encoder, with a higher quality level demanding more CPU in exchange for a better compression ratio. Slower compression ultimately leads to higher compression rates, yet Brotli still decompresses fast. It’s worth noting though that Brotli at compression level 4 both produces smaller output and compresses faster than Gzip.

In practice, Brotli appears to be much more effective than Gzip. Opinions and experiences differ, but if your site is already optimized with Gzip, you can expect at least single-digit and at best double-digit improvements in size reduction and FCP timings. You can also estimate Brotli compression savings for your site.

Browsers will accept Brotli only if the user is visiting a website over HTTPS. Brotli is widely supported, and many CDNs support it (Akamai, Netlify Edge, AWS, KeyCDN, Fastly (currently only as a pass-through), Cloudflare, CDN77) and you can enable Brotli even on CDNs that don’t support it yet (with a service worker).

The catch is that because compressing all assets with Brotli at a high compression level is expensive, many hosting providers can’t use it at full scale just because of the huge cost overhead it produces. In fact, at the highest level of compression, Brotli is so slow that any potential gains in file size could be nullified by the amount of time it takes for the server to begin sending the response as it waits to dynamically compress the asset. (But if you can compress statically at build time, higher compression settings are of course preferred.)

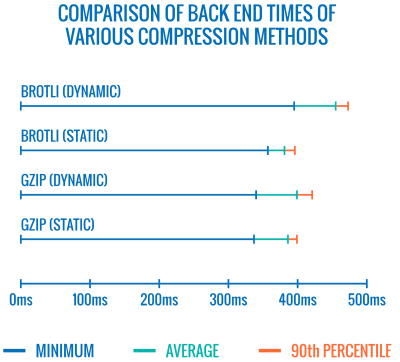

Comparison of back end times of various compression methods. Unsurprisingly, Brotli is slower than gzip (for now).

This might be changing though. The Brotli file format includes a built-in static dictionary, and in addition to containing various strings in multiple languages, it also supports the option to apply multiple transformations to those words, increasing its versatility. In his research, Felix Hanau has discovered a way to improve the compression at levels 5 through 9 by using "a more specialized subset of the dictionary than the default" and relying on the `Content-Type` header to tell the compressor if it should use a dictionary for HTML, JavaScript or CSS. The result was a "negligible performance impact (1% to 3% more CPU compared to 12% normally) when compressing web content at high compression levels, using a limited dictionary use approach."

With the improved dictionary approach, we can compress assets faster on higher compression levels, all while using only 1% to 3% more CPU. Normally, compression level 6 over 5 would increase CPU usage by up to 12%.

On top of that, with Elena Kirilenko’s research, we can achieve fast and efficient Brotli recompression using previous compression artifacts. According to Elena, "once we have an asset compressed via Brotli, and we’re trying to compress dynamic content on-the-fly, where the content resembles content available to us ahead of time, we can achieve significant improvements in compression times."

How often is this the case? For example, when delivering subsets of JavaScript bundles (e.g. when parts of the code are already cached on the client, or with dynamic bundle serving with WebBundles), with dynamic HTML based on known-in-advance templates, or with dynamically subsetted WOFF2 fonts. According to Elena, we can get a 5.3% improvement in compression and a 39% improvement in compression speed when removing 10% of the content, and 3.2% better compression rates and 26% faster compression when removing 50% of the content.

Brotli compression is getting better, so if you can bypass the cost of dynamically compressing static assets, it’s definitely worth the effort. It goes without saying that Brotli can be used for any plaintext payload — HTML, CSS, SVG, JavaScript, JSON, and so on.

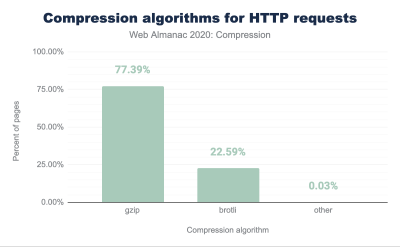

Note: as of early 2021, approximately 60% of HTTP responses are delivered with no text-based compression, with 30.82% compressing with Gzip, and 9.1% compressing with Brotli (both on mobile and on desktop). E.g., 23.4% of Angular pages are not compressed (via gzip or Brotli). Yet often turning on compression is one of the easiest wins to improve performance with a simple flip of a switch.

The strategy? Pre-compress static assets with Brotli+Gzip at the highest level and compress (dynamic) HTML on the fly with Brotli at level 4–6. Make sure that the server handles content negotiation for Brotli or Gzip properly.
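The strategy above can be sketched with Node.js and its built-in `zlib` module. This is a minimal sketch, not a production setup: the function names (`precompress`, `compressDynamic`, `pickEncoding`) are made up for illustration, and the negotiation deliberately ignores `q` weights other than `q=0`.

```javascript
const zlib = require('zlib');

// Build time: compress a static asset once, at the highest quality levels.
function precompress(buffer) {
  return {
    br: zlib.brotliCompressSync(buffer, {
      params: {
        [zlib.constants.BROTLI_PARAM_QUALITY]: zlib.constants.BROTLI_MAX_QUALITY,
        [zlib.constants.BROTLI_PARAM_SIZE_HINT]: buffer.length,
      },
    }),
    gzip: zlib.gzipSync(buffer, { level: zlib.constants.Z_BEST_COMPRESSION }),
  };
}

// Request time: compress dynamic HTML at a moderate Brotli level (4 to 6).
function compressDynamic(html, quality = 5) {
  return zlib.brotliCompressSync(Buffer.from(html), {
    params: {
      [zlib.constants.BROTLI_PARAM_MODE]: zlib.constants.BROTLI_MODE_TEXT,
      [zlib.constants.BROTLI_PARAM_QUALITY]: quality,
    },
  });
}

// Simplified content negotiation: prefer Brotli, then Gzip, else no compression.
// Assumption: only an explicit q=0 (refusal) is honoured; other weights are ignored.
function pickEncoding(acceptEncoding = '') {
  const accepted = new Set();
  for (const part of acceptEncoding.toLowerCase().split(',')) {
    const [name, ...params] = part.trim().split(';').map((s) => s.trim());
    const refused = params.some((p) => /^q=0(\.0*)?$/.test(p));
    if (name && !refused) accepted.add(name);
  }
  if (accepted.has('br')) return 'br';
  if (accepted.has('gzip')) return 'gzip';
  return 'identity';
}
```

A server using this would call `pickEncoding(req.headers['accept-encoding'])`, send the matching pre-compressed file with the corresponding `Content-Encoding` header, and add `Vary: Accept-Encoding` so that caches keep the variants apart.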

- Do we use adaptive media loading and client hints?

It’s coming from the land of old news, but it’s always a good reminder to use responsive images with `srcset`, `sizes` and the `<picture>` element. Especially for sites with a heavy media footprint, we can take it a step further with adaptive media loading (in this example React + Next.js), serving a light experience to slow networks and low-memory devices and the full experience to fast networks and high-memory devices. In the context of React, we can achieve it with client hints on the server and react-adaptive-hooks on the client.

The future of responsive images might change dramatically with the wider adoption of client hints. Client hints are HTTP request header fields, e.g. `DPR`, `Viewport-Width`, `Width`, `Save-Data`, `Accept` (to specify image format preferences) and others. They are supposed to inform the server about the specifics of the user’s browser, screen, connection etc.

As a result, the server can decide how to fill in the layout with appropriately sized images, and serve only these images in desired formats. With client hints, we move the resource selection from HTML markup and into the request-response negotiation between the client and server.

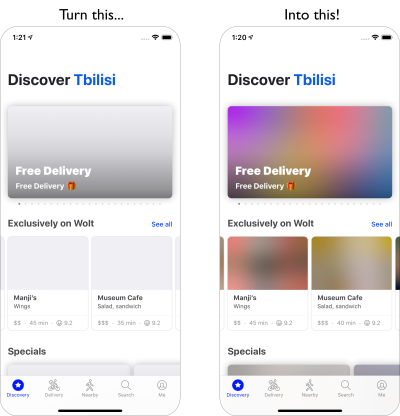

Adaptive media serving in use. We send a placeholder with text to users who are offline, a low-resolution image to 2G users, a high-resolution image to 3G users and an HD video to 4G users. Via Loading web pages fast on a $20 feature phone.

As Ilya Grigorik noted a while back, client hints complete the picture; they aren’t an alternative to responsive images. "The `<picture>` element provides the necessary art-direction control in the HTML markup. Client hints provide annotations on resulting image requests that enable resource selection automation. Service Worker provides full request and response management capabilities on the client."

A service worker could, for example, append new client hints headers values to the request, rewrite the URL and point the image request to a CDN, adapt response based on connectivity and user preferences, etc. It holds true not only for image assets but for pretty much all other requests as well.

For clients that support client hints, one could measure 42% byte savings on images and 1MB+ fewer bytes for 70th+ percentile. On Smashing Magazine, we could measure 19-32% improvement, too. Client hints are supported in Chromium-based browsers, but they are still under consideration in Firefox.

However, if you supply both the normal responsive images markup and the `<meta>` tag for Client Hints, then a supporting browser will evaluate the responsive images markup and request the appropriate image source using the Client Hints HTTP headers.
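To make the server side of that negotiation more concrete, here is a minimal sketch of how a server might pick an image width from client hints. The list of available widths, the function name `pickImageWidth` and the policy of serving 1x images when `Save-Data` is on are all assumptions made for illustration; header names are lowercased, as Node.js exposes them on `req.headers`.

```javascript
// Hypothetical set of pre-generated image widths, in physical pixels.
const AVAILABLE_WIDTHS = [320, 640, 1280, 1920];

function pickImageWidth(headers, widths = AVAILABLE_WIDTHS) {
  const dpr = parseFloat(headers['dpr']) || 1;
  const width = parseInt(headers['width'], 10);
  const viewportWidth = parseInt(headers['viewport-width'], 10);
  const saveData = (headers['save-data'] || '').toLowerCase() === 'on';

  // `Width` is already expressed in physical pixels, while `Viewport-Width`
  // is in CSS pixels and has to be scaled by the device pixel ratio.
  // Assumed policy: with Save-Data on, serve a 1x image.
  let target;
  if (width > 0) {
    target = saveData ? width / dpr : width;
  } else if (viewportWidth > 0) {
    target = saveData ? viewportWidth : viewportWidth * dpr;
  } else {
    return null; // no hints sent: let the caller serve its default image
  }
  target = Math.ceil(target);

  // Smallest variant that still covers the target, or the largest one we have.
  const sorted = [...widths].sort((a, b) => a - b);
  return sorted.find((w) => w >= target) || sorted[sorted.length - 1];
}
```

Browsers only send these headers after the server has opted in, for example with an `Accept-CH` response header, so a `null` result on the first request is to be expected.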

- Do we use responsive images for background images?

We surely should! With `image-set`, now supported in Safari 14 and in most modern browsers except Firefox, we can serve responsive background images as well:

    background-image: url("fallback.jpg");
    background-image:
      image-set(
        "photo-small.jpg" 1x,
        "photo-large.jpg" 2x,
        "photo-print.jpg" 600dpi);

Basically we can conditionally serve low-resolution background images with a `1x` descriptor, and higher-resolution images with a `2x` descriptor, and even a print-quality image with a `600dpi` descriptor. Beware though: browsers do not provide any special information on background images to assistive technology, so ideally these photos would be merely decoration.

- Do we use WebP?

Image compression is often considered a quick win, yet it’s still heavily underutilized in practice. Of course images do not block rendering, but they contribute heavily to poor LCP scores, and very often they are just too heavy and too large for the device they are being consumed on.

So at the very least, we could explore using the WebP format for our images. In fact, the WebP saga neared its end last year with Apple adding support for WebP in Safari 14. So after many years of discussions and debates, as of today, WebP is supported in all modern browsers. So we can serve WebP images with the `<picture>` element and a JPEG fallback if needed (see Andreas Bovens' code snippet) or by using content negotiation (using `Accept` headers).

WebP isn’t without its downsides though. While WebP image file sizes are comparable to those of equivalent Guetzli and Zopfli output, the format doesn’t support progressive rendering like JPEG, which is why users might see the finished image faster with a good ol' JPEG although WebP images might be getting faster through the network. With JPEG, we can serve a "decent" user experience with half or even a quarter of the data and load the rest later, rather than have a half-empty image as is the case with WebP.

Your decision will depend on what you are after: with WebP, you’ll reduce the payload, and with JPEG you’ll improve perceived performance. You can learn more about WebP in the WebP Rewind talk by Google’s Pascal Massimino.

For conversion to WebP, you can use WebP Converter, cwebp or libwebp. Ire Aderinokun has a very detailed tutorial on converting images to WebP, too — and so does Josh Comeau in his piece on embracing modern image formats.

A thorough talk about WebP: WebP Rewind by Pascal Massimino.

Sketch natively supports WebP, and WebP images can be exported from Photoshop using a WebP plugin for Photoshop. But other options are available, too.

If you’re using WordPress or Joomla, there are extensions to help you easily implement support for WebP, such as Optimus and Cache Enabler for WordPress and Joomla’s own supported extension (via Cody Arsenault). You can also abstract away the `picture` element with React, styled components or gatsby-image.

Ah, shameless plug! Jeremy Wagner even published a Smashing book on WebP which you might want to check if you are interested in everything around WebP.

- Do we use AVIF?

You might have heard the big news: AVIF has landed. It’s a new image format derived from the keyframes of AV1 video. It’s an open, royalty-free format that supports lossy and lossless compression, animation, lossy alpha channel and can handle sharp lines and solid colors (which was an issue with JPEG), while providing better results at both.

In fact, compared to WebP and JPEG, AVIF performs significantly better, yielding median file size savings of up to 50% at the same DSSIM (a measure of (dis)similarity between two or more images using an algorithm approximating human vision). In his thorough post on optimizing image loading, Malte Ubl notes that AVIF "very consistently outperforms JPEG in a very significant way. This is different from WebP which doesn’t always produce smaller images than JPEG and may actually be a net-loss due to lack of support for progressive loading."

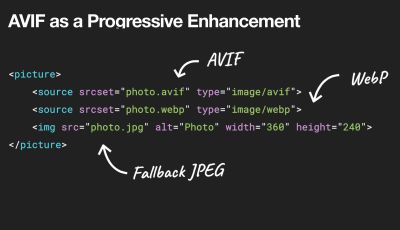

We can use AVIF as a progressive enhancement, delivering WebP or JPEG or PNG to older browsers. See plain text view below.

Ironically, AVIF can perform even better than large SVGs although of course it shouldn’t be seen as a replacement for SVGs. It is also one of the first image formats to support HDR color, offering higher brightness, color bit depth, and color gamuts. The only downside is that currently AVIF doesn’t support progressive image decoding (yet?) and, similarly to Brotli, a high compression rate encoding is currently quite slow, although decoding is fast.

AVIF is currently supported in Chrome, Firefox and Opera, and support in Safari is expected to come soon (as Apple is a member of the group that created AV1).

What’s the best way to serve images these days then? For illustrations and vector images, (compressed) SVG is undoubtedly the best choice. For photos, we use content negotiation methods with the `picture` element. If AVIF is supported, we send an AVIF image; if it’s not the case, we fall back to WebP first, and if WebP isn’t supported either, we switch to JPEG or PNG as fallback (applying `@media` conditions if needed):

    <picture>
      <source srcset="image.avif" type="image/avif">
      <source srcset="image.webp" type="image/webp">
      <img src="image.jpg" alt="Photo" width="450" height="350">
    </picture>

Frankly, it’s more likely that we’ll be using some conditions within the `picture` element though:

    <picture>
      <source
        sizes="(max-width: 608px) 100vw, 608px"
        srcset="
          /img/Z1s3TKV-1920w.avif 1920w,
          /img/Z1s3TKV-1280w.avif 1280w,
          /img/Z1s3TKV-640w.avif   640w,
          /img/Z1s3TKV-320w.avif   320w"
        type="image/avif" />
      <source
        sizes="(max-width: 608px) 100vw, 608px"
        srcset="
          /img/Z1s3TKV-1920w.webp 1920w,
          /img/Z1s3TKV-1280w.webp 1280w,
          /img/Z1s3TKV-640w.webp   640w,
          /img/Z1s3TKV-320w.webp   320w"
        type="image/webp" />
      <source
        sizes="(max-width: 608px) 100vw, 608px"
        srcset="
          /img/Z1s3TKV-1920w.jpg 1920w,
          /img/Z1s3TKV-1280w.jpg 1280w,
          /img/Z1s3TKV-640w.jpg   640w,
          /img/Z1s3TKV-320w.jpg   320w"
        type="image/jpeg" />
      <img src="fallback-image.jpg" alt="Photo" width="450" height="350">
    </picture>

You can go even further by swapping animated images with static images for customers who opt in for less motion with `prefers-reduced-motion`:

    <picture>
      <source media="(prefers-reduced-motion: reduce)" srcset="no-motion.avif" type="image/avif">
      <source media="(prefers-reduced-motion: reduce)" srcset="no-motion.jpg" type="image/jpeg">
      <source srcset="motion.avif" type="image/avif">
      <img src="motion.jpg" alt="Animated AVIF">
    </picture>

Over the last couple of months, AVIF has gained quite some traction:

- We can test WebP/AVIF fallbacks in the Rendering panel in DevTools.

- We can use Squoosh, AVIF.io and libavif to encode, decode, compress and convert AVIF files.

- We can use Jake Archibald’s AVIF Preact component that decodes an AVIF file in a worker and displays the result on a canvas.

- To deliver AVIF only to supporting browsers, we can use a PostCSS plugin along with a 315B-script to use AVIF in your CSS declarations.

- We can progressively deliver new image formats with CSS and Cloudflare Workers to dynamically alter the returned HTML document, inferring information from the `accept` header, and then add the `webp`/`avif` etc. classes as appropriate.

- AVIF is already available in Cloudinary (with usage limits), Cloudflare supports AVIF in Image Resizing, and you can enable AVIF with Custom AVIF Headers in Netlify.

- When it comes to animation, AVIF performs as well as Safari’s `img src=mp4`, outperforming GIF and WebP at large, but MP4 still performs better.

- In general, for animations, AVC1 (h264) > HVC1 > WebP > AVIF > GIF, assuming that Chromium-based browsers will ever support `img src=mp4`.

- You can find more details about AVIF in the AVIF for Next Generation Image Coding talk by Aditya Mavlankar from Netflix, and The AVIF Image Format talk by Cloudflare’s Kornel Lesiński.

- A great reference for everything AVIF: Jake Archibald’s comprehensive post on AVIF has landed.
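The `Accept`-header approach from the list above (inferring format support on the server or at the edge) boils down to a small check. The following is a minimal sketch with a made-up function name, `pickImageFormat`; it ignores `q` weights and simply looks for explicit `image/avif` and `image/webp` entries, falling back to JPEG.

```javascript
// Decide which image format to serve based on the request's Accept header.
// Assumption: browsers that can decode AVIF or WebP list the MIME type explicitly.
function pickImageFormat(acceptHeader = '') {
  const types = acceptHeader
    .toLowerCase()
    .split(',')
    .map((entry) => entry.split(';')[0].trim());

  if (types.includes('image/avif')) return 'avif';
  if (types.includes('image/webp')) return 'webp';
  return 'jpeg';
}
```

The result could then be used to rewrite the image URL or to add a class such as `avif` or `webp` to the HTML document. When the same URL returns different formats, the response should carry `Vary: Accept` so that caches don’t hand an AVIF file to a browser that can’t decode it.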

So is the future AVIF then? Jon Sneyers disagrees: AVIF performs 60% worse than JPEG XL, another free and open format developed by Google and Cloudinary. In fact, JPEG XL seems to be performing way better across the board. However, JPEG XL is still only in the final stages of standardization, and does not yet work in any browser. (Not to be confused with Microsoft’s JPEG-XR coming from good ol' Internet Explorer 9 times.)

- Are JPEG/PNG/SVGs properly optimized?

When you’re working on a landing page on which it’s critical that a hero image loads blazingly fast, make sure that JPEGs are progressive and compressed with mozJPEG (which improves the start rendering time by manipulating scan levels) or Guetzli, Google’s open-source encoder focusing on perceptual performance, and utilizing learnings from Zopfli and WebP. The only downside: slow processing times (a minute of CPU per megapixel).

For PNG, we can use Pingo, and for SVG, we can use SVGO or SVGOMG. And if you need to quickly preview and copy or download all the SVG assets from a website, svg-grabber can do that for you, too.

Every single image optimization article would state it, but keeping vector assets clean and tight is always worth mentioning. Make sure to clean up unused assets, remove unnecessary metadata and reduce the number of path points in artwork (and thus SVG code). (Thanks, Jeremy!)

There are also useful online tools available though:

- Use Squoosh to compress, resize and manipulate images at the optimal compression levels (lossy or lossless),

- Use Guetzli.it to compress and optimize JPEG images with Guetzli, which works well for images with sharp edges and solid colors (but might be quite a bit slower).

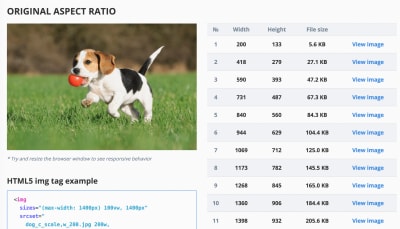

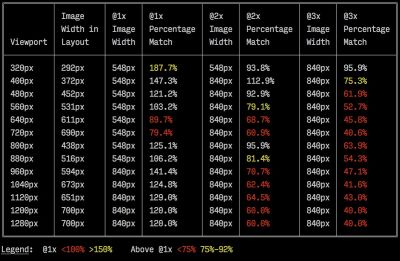

- Use the Responsive Image Breakpoints Generator or a service such as Cloudinary or Imgix to automate image optimization. Also, in many cases, using `srcset` and `sizes` alone will reap significant benefits.

- To check the efficiency of your responsive markup, you can use imaging-heap, a command line tool that measures the efficiency across viewport sizes and device pixel ratios.

- You can add automatic image compression to your GitHub workflows, so no image can hit production uncompressed. The action uses mozjpeg and libvips that work with PNGs and JPGs.

- To optimize storage internally, you could use Dropbox’s new Lepton format for losslessly compressing JPEGs by an average of 22%.

- Use BlurHash if you’d like to show a placeholder image early. BlurHash takes an image, and gives you a short string (only 20-30 characters!) that represents the placeholder for this image. The string is short enough that it can easily be added as a field in a JSON object.

BlurHash is a tiny, compact representation of a placeholder for an image.

Sometimes optimizing images alone won’t do the trick. To improve the time needed to start the rendering of a critical image, lazy-load less important images and defer any scripts to load after critical images have already rendered. The most bulletproof way is hybrid lazy-loading, when we utilize native lazy-loading and lazyload, a library that detects any visibility changes triggered through user interaction (with IntersectionObserver which we’ll explore later). Additionally:

- Consider preloading critical images, so a browser doesn’t discover them too late. For background images, if you want to be even more aggressive than that, you can add the image as a regular image with `img src`, and then hide it off the screen.

- Consider Swapping Images with the Sizes Attribute by specifying different image display dimensions depending on media queries, e.g. to manipulate `sizes` to swap sources in a magnifier component.

- Review image download inconsistencies to prevent unexpected downloads for foreground and background images. Watch out for images that are loaded by default, but might never be displayed, e.g. in carousels, accordions and image galleries.

- Make sure to always set

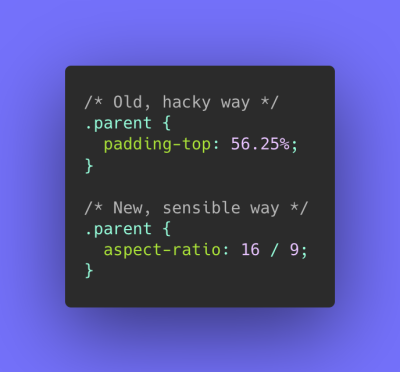

widthandheighton images. Watch out for theaspect-ratioproperty in CSS andintrinsicsizeattribute which will allow us to set aspect ratios and dimensions for images, so browser can reserve a pre-defined layout slot early to avoid layout jumps during the page load.

Should be just a matter of weeks or months now, with aspect-ratio landing in browsers. In Safari Technical Preview 118 already. Currently behind the flag in Firefox and Chrome.

If you feel adventurous, you could chop and rearrange HTTP/2 streams using Edge workers, basically a real-time filter living on the CDN, to send images faster through the network. Edge workers use JavaScript streams that use chunks which you can control (basically they are JavaScript that runs on the CDN edge that can modify the streaming responses), so you can control the delivery of images.

With a service worker, it’s too late as you can’t control what’s on the wire, but it does work with Edge workers. So you can use them on top of static JPEGs saved progressively for a particular landing page.
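Coming back to the hybrid lazy-loading idea mentioned earlier in this section, the decision logic can be sketched in a few lines. Note that this sketch uses a hand-written `IntersectionObserver` fallback rather than the lazyload library itself, and it assumes a hypothetical markup convention in which images carry their real URL in a `data-src` attribute. The function name `lazyLoadImages` and the 200px margin are illustrative choices.

```javascript
// Hybrid lazy-loading: native where available, IntersectionObserver otherwise.
// `win` is injectable so the logic can be exercised outside of a browser.
function lazyLoadImages(images, win = window) {
  const load = (img) => {
    img.src = img.dataset.src;
  };

  // 1. Native lazy-loading: hand the decision over to the browser.
  if ('loading' in win.HTMLImageElement.prototype) {
    images.forEach((img) => {
      img.loading = 'lazy';
      load(img);
    });
    return 'native';
  }

  // 2. Fallback: start loading shortly before an image scrolls into view.
  if ('IntersectionObserver' in win) {
    const observer = new win.IntersectionObserver(
      (entries) => {
        entries.forEach((entry) => {
          if (entry.isIntersecting) {
            load(entry.target);
            observer.unobserve(entry.target);
          }
        });
      },
      { rootMargin: '200px' }
    );
    images.forEach((img) => observer.observe(img));
    return 'observer';
  }

  // 3. Neither is supported: load everything right away.
  images.forEach(load);
  return 'eager';
}
```

In a page, this might be called as `lazyLoadImages(Array.from(document.querySelectorAll('img[data-src]')))` once the DOM is ready. Critical images, such as a hero image, are better left out of this mechanism altogether.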

A sample output by imaging-heap, a command line tool that measures the efficiency across viewport sizes and device pixel ratios. (Image source)

Not good enough? Well, you can also improve perceived performance for images with the multiple background images technique. Keep in mind that playing with contrast and blurring out unnecessary details (or removing colors) can reduce file size as well. Ah, you need to enlarge a small photo without losing quality? Consider using Letsenhance.io.

These optimizations so far cover just the basics. Addy Osmani has published a very detailed guide on Essential Image Optimization that goes very deep into details of image compression and color management. For example, you could blur out unnecessary parts of the image (by applying a Gaussian blur filter to them) to reduce the file size, and eventually you might even start removing colors or turn the picture into black and white to reduce the size even further. For background images, exporting photos from Photoshop with 0 to 10% quality can be absolutely acceptable as well.

On Smashing Magazine, we use the postfix `-opt` for image names, for example `brotli-compression-opt.png`; whenever an image contains that postfix, everybody on the team knows that the image has already been optimized.

Ah, and don’t use JPEG-XR on the web: "the processing of decoding JPEG-XRs software-side on the CPU nullifies and even outweighs the potentially positive impact of byte size savings, especially in the context of SPAs" (not to be confused with Cloudinary/Google’s JPEG XL though).

- Are videos properly optimized?

We covered images so far, but we’ve avoided a conversation about good ol' GIFs. Despite our love for GIFs, it’s really the time to abandon them for good (at least in our websites and apps). Instead of loading heavy animated GIFs which impact both rendering performance and bandwidth, it’s a good idea to switch either to animated WebP (with GIF being a fallback) or replace them with looping HTML5 videos altogether.

Unlike with images, browsers do not preload `video` content, but HTML5 videos tend to be much lighter and smaller than GIFs. Not an option? Well, at least we can add lossy compression to GIFs with Lossy GIF, gifsicle or giflossy.

Tests by Colin Bendell show that inline videos within `img` tags in Safari Technology Preview display at least 20× faster and decode 7× faster than the GIF equivalent, in addition to being a fraction in file size. However, it’s not supported in other browsers.

In the land of good news, video formats have been advancing massively over the years. For a long time, we had hoped that WebM would become the format to rule them all, and WebP (which is basically one still image inside of the WebM video container) would become a replacement for dated image formats. Indeed, Safari is now supporting WebP, but despite WebP and WebM gaining support these days, the breakthrough didn’t really happen.

Still, we could use WebM for most modern browsers out there:

    <!-- By Houssein Djirdeh. https://web.dev/replace-gifs-with-videos/ -->
    <!-- A common scenario: WebM first, with MP4 as the fallback. -->
    <video autoplay loop muted playsinline>
      <source src="my-animation.webm" type="video/webm">
      <source src="my-animation.mp4" type="video/mp4">
    </video>

But perhaps we could revisit it altogether. In 2018, the Alliance for Open Media released a new promising video format called AV1. AV1 has compression similar to the H.265 codec (the evolution of H.264) but unlike the latter, AV1 is free. The H.265 license pricing pushed browser vendors to adopt a comparably performant AV1 instead: AV1 (just like H.265) compresses twice as well as WebM.

AV1 has good chances of becoming the ultimate standard for video on the web. (Image credit: Wikimedia.org)

In fact, Apple currently uses HEIF format and HEVC (H.265), and all the photos and videos on the latest iOS are saved in these formats, not JPEG. While HEIF and HEVC (H.265) aren’t properly exposed to the web (yet?), AV1 is, and it’s gaining browser support. So adding the `AV1` source in your `video` tag is reasonable, as all browser vendors seem to be on board.

For now, the most widely used and supported encoding is H.264, served by MP4 files, so before serving the file, make sure that your MP4s are processed with multipass encoding, blurred with the frei0r iirblur effect (if applicable), and that the moov atom metadata is moved to the head of the file, while your server accepts byte serving. Boris Schapira provides exact instructions for FFmpeg to optimize videos to the maximum. Of course, providing WebM format as an alternative would help, too.

Need to start rendering videos faster but video files are still too large? For example, whenever you have a large background video on a landing page? A common technique is to show the very first frame as a still image first, or display a heavily optimized, short looping segment that could be interpreted as a part of the video, and then, whenever the video is buffered enough, start playing the actual video. Doug Sillars has written a detailed guide to background video performance that could be helpful in that case. (Thanks, Guy Podjarny!)

For the above scenario, you might want to provide responsive poster images. By default,

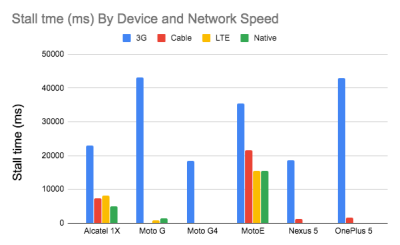

videoelements only allow one image as the poster, which isn’t necessarily optimal. We can use Responsive Video Poster, a JavaScript library that allows you to use different poster images for different screens, while also adding a transitioning overlay and full styling control of video placeholders.The research shows that video stream quality impacts viewer behavior. In fact, viewers start to abandon the video if the startup delay exceeds about 2 seconds. Beyond that point, a 1-second increase in delay results in roughly a 5.8% increase in abandonment rate. So it’s not surprising that the median video start time is 12.8s, with 40% of videos having at least 1 stall, and 20% at least 2s of stalled video playback. In fact, video stalls are unavoidable on 3G as videos play back faster than the network can supply content.

So, what’s the solution? Usually small-screen devices cannot handle the 720p and 1080p we are serving to the desktop. According to Doug Sillars, we can either create smaller versions of our videos and use JavaScript to select the source for smaller screens to ensure fast and smooth playback on these devices, or use streaming video. HLS video streams will deliver an appropriately sized video to the device, abstracting away the need to create different videos for different screens. They will also negotiate the network speed, and adapt the video bitrate to the speed of the network you are using.
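The first option, selecting a smaller rendition with JavaScript, might look like the following minimal sketch. The file names, the breakpoints and the function name `pickRendition` are hypothetical; real breakpoints would depend on the renditions you actually encode.

```javascript
// Hypothetical renditions, ordered from the smallest screen to the largest.
const RENDITIONS = [
  { maxWidth: 480, src: 'video-360p.mp4' },
  { maxWidth: 1000, src: 'video-720p.mp4' },
  { maxWidth: Infinity, src: 'video-1080p.mp4' },
];

// Return the first rendition whose upper bound covers the viewport width.
function pickRendition(viewportWidth, renditions = RENDITIONS) {
  return renditions.find((r) => viewportWidth <= r.maxWidth).src;
}

// In the browser, this could be wired up as:
// const video = document.getElementById('hero-video');
// video.src = pickRendition(window.innerWidth);
```

Because the last entry uses `Infinity` as its upper bound, every numeric viewport width resolves to a rendition.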

To avoid the waste on bandwidth, we could only add the video source for devices that actually can play the video well. Alternatively, we can remove the `autoplay` attribute from the `video` tag altogether and use JavaScript to insert `autoplay` for larger screens. Additionally, we need to add `preload="none"` on `video` to tell the browser to not download any of the video files until it actually needs the file:

    <!-- Based on Doug Sillars's post. https://dougsillars.com/2020/01/06/hiding-videos-on-the-mbile-web/ -->
    <video id="hero-video"
           preload="none"
           playsinline
           muted
           loop
           width="1920"
           height="1080"
           poster="poster.jpg">
      <source src="video.webm" type="video/webm">
      <source src="video.mp4" type="video/mp4">
    </video>

Then we can target specifically browsers that actually support AV1:

    <!-- Based on Doug Sillars's post. https://dougsillars.com/2020/01/06/hiding-videos-on-the-mbile-web/ -->
    <video id="hero-video"
           preload="none"
           playsinline
           muted
           loop
           width="1920"
           height="1080"
           poster="poster.jpg">
      <source src="video.av1.mp4" type="video/mp4; codecs=av01.0.05M.08">
      <source src="video.hevc.mp4" type="video/mp4; codecs=hevc">
      <source src="video.webm" type="video/webm">
      <source src="video.mp4" type="video/mp4">
    </video>

We then could re-add the `autoplay` over a certain threshold (e.g. 1000px):

    <script>
      /* By Doug Sillars. https://dougsillars.com/2020/01/06/hiding-videos-on-the-mbile-web/ */
      // Assign the function itself; calling it here would run it before the page has loaded.
      window.onload = addAutoplay;

      function addAutoplay() {
        // Look the element up inside the handler, once the DOM is available.
        var videoLocation = document.getElementById("hero-video");
        if (window.innerWidth > 1000) {
          videoLocation.setAttribute("autoplay", "");
        }
      }
    </script>

Number of Stalls by device and network speed. Faster devices on faster networks have virtually no stalls. According to Doug Sillars’ research.

Video playback performance is a story on its own, and if you’d like to dive into it in detail, take a look at another series by Doug Sillars on The Current State of Video and Video Delivery Best Practices, which includes details on video delivery metrics, video preloading, compression and streaming. Finally, you can check how slow or fast your video streaming will be with Stream or Not.

- Is web font delivery optimized?

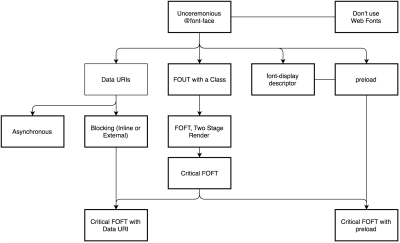

The first question that’s worth asking is if we can get away with using UI system fonts in the first place; we just need to double-check that they appear correctly on various platforms. If it’s not the case, chances are high that the web fonts we are serving include glyphs and extra features and weights that aren’t being used. We can ask our type foundry to subset web fonts or if we are using open-source fonts, subset them on our own with Glyphhanger or Fontsquirrel. We can even automate our entire workflow with Peter Müller’s subfont, a command line tool that statically analyses your page in order to generate the most optimal web font subsets, and then inject them into our pages.

WOFF2 support is great, and we can use WOFF as fallback for browsers that don’t support it, or perhaps legacy browsers could be served system fonts. There are many, many, many options for web font loading, and we can choose one of the strategies from Zach Leatherman’s "Comprehensive Guide to Font-Loading Strategies" (code snippets also available as Web font loading recipes).

Probably the better options to consider today are Critical FOFT with `preload` and "The Compromise" method. Both of them use a two-stage render for delivering web fonts in steps: first a small supersubset required to render the page fast and accurately with the web font, and then the rest of the family, loaded async. The difference is that "The Compromise" technique loads the polyfill asynchronously only if font load events are not supported, so you don’t need to load the polyfill by default. Need a quick win? Zach Leatherman has a quick 23-min tutorial and case study to get your fonts in order.

In general, it might be a good idea to use the `preload` resource hint to preload fonts, but in your markup include the hints after the link to critical CSS and JavaScript. With `preload`, there is a puzzle of priorities, so consider injecting `rel="preload"` elements into the DOM just before the external blocking scripts. According to Andy Davies, "resources injected using a script are hidden from the browser until the script executes, and we can use this behaviour to delay when the browser discovers the `preload` hint." Otherwise, font loading will cost you in the first render time.

When everything is critical, nothing is critical. `preload` only one or a maximum of two fonts of each family. (Image credit: Zach Leatherman, slide 93)

It’s a good idea to be selective and choose the files that matter most, e.g. the ones that are critical for rendering or that would help you avoid visible and disruptive text reflows. In general, Zach advises preloading one or two fonts of each family; it also makes sense to delay loading some fonts if they are less critical.
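As a rough illustration of the two ideas above (injecting `preload` hints from a script, and limiting them to one or two files), here is a minimal sketch. The function name `injectFontPreloads`, the file paths and the default cap of two are assumptions made for this example, and it assumes the fonts are WOFF2 files.

```javascript
// Hypothetical helper: add <link rel="preload"> hints for a few critical fonts.
// `doc` is the page's document; `maxFonts` caps how many files get preloaded.
function injectFontPreloads(doc, fontUrls, maxFonts = 2) {
  const links = [];
  for (const href of fontUrls.slice(0, maxFonts)) {
    const link = doc.createElement("link");
    link.rel = "preload";
    link.as = "font";
    link.type = "font/woff2"; // assumption: WOFF2 files
    // Fonts are fetched in CORS mode, so the hint needs crossorigin
    // even when the file is served from the same origin.
    link.crossOrigin = "anonymous";
    link.href = href;
    doc.head.appendChild(link);
    links.push(link);
  }
  return links;
}

// In a page, this would run in an inline script placed just before the
// external blocking scripts. The paths are placeholders.
if (typeof document !== "undefined") {
  injectFontPreloads(document, [
    "/fonts/lato-regular-subset.woff2",
    "/fonts/lato-bold-subset.woff2",
    "/fonts/lato-italic.woff2", // skipped: over the cap of two
  ]);
}
```

Passing the document in as a parameter is only there to keep the function easy to try outside a browser; in a real page the inline script could use `document` directly.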

It has become quite common to use the `local()` value (which refers to a local font by name) when defining a `font-family` in the `@font-face` rule:

```css
/* Warning! Not a good idea! */
@font-face {
  font-family: Open Sans;
  src: local('Open Sans Regular'),
       local('OpenSans-Regular'),
       url('opensans.woff2') format('woff2'),
       url('opensans.woff') format('woff');
}
```

The idea is reasonable: some popular open-source fonts such as Open Sans come pre-installed with some drivers or apps, so if the font is available locally, the browser doesn’t need to download the web font and can display the local font immediately. However, as Bram Stein noted, "though a local font matches the name of a web font, it most likely isn’t the same font. Many web fonts differ from their "desktop" version. The text might be rendered differently, some characters may fall back to other fonts, OpenType features can be missing entirely, or the line height may be different."

Also, as typefaces evolve over time, the locally installed version might be very different from the web font, with characters looking very different. So, according to Bram, it’s better to never mix locally installed fonts and web fonts in `@font-face` rules. Google Fonts has followed suit by disabling `local()` on the CSS results for all users, other than Android requests for Roboto.

Nobody likes waiting for the content to be displayed. With the `font-display` CSS descriptor, we can control the font loading behavior and enable content to be readable immediately (with `font-display: optional`) or almost immediately (with `font-display: swap` and a timeout of 3s, as long as the font gets successfully downloaded). (Well, it’s a bit more complicated than that.)

However, if we want to minimize the impact of text reflows, we could use the Font Loading API (supported in all modern browsers). Specifically, that means that for every font, we’d create a `FontFace` object, then try to fetch them all, and only then apply them to the page. This way, we group all repaints by loading all fonts asynchronously, and then switch from fallback fonts to the web font exactly once. Take a look at the code snippet:

```js
/* Load two web fonts using JavaScript */
/* Zach Leatherman: https://noti.st/zachleat/KNaZEg/the-five-whys-of-web-font-loading-performance#sWkN4u4 */

// Remove existing @font-face blocks
// Create two
let font = new FontFace("Noto Serif", /* ... */);
let fontBold = new FontFace("Noto Serif", /* ... */);

// Load two fonts
let fonts = await Promise.all([
  font.load(),
  fontBold.load()
]);

// Group repaints and render both fonts at the same time!
fonts.forEach(font => document.fonts.add(font));
```

To initiate a very early fetch of the fonts with Font Loading API in use, Adrian Bece suggests adding a non-breaking space `&nbsp;` at the top of the `body`, hiding it from assistive technology with `aria-hidden="true"` and visually with a `.hidden` class:

```html
<body class="no-js">
  <!-- ... Website content ... -->
  <div aria-hidden="true" class="hidden" style="font-family: '[web-font-name]'">
    <!-- There is a non-breaking space here -->
    &nbsp;
  </div>
  <script>
    document.getElementsByTagName("body")[0].classList.remove("no-js");
  </script>
</body>
```

This goes along with CSS that has different font families declared for different states of loading, with the change triggered by Font Loading API once the fonts have successfully loaded:

```css
body:not(.wf-merriweather--loaded):not(.no-js) {
  font-family: [fallback-system-font];
  /* Fallback font styles */
}

.wf-merriweather--loaded,
.no-js {
  font-family: "[web-font-name]";
  /* Webfont styles */
}

/* Accessible hiding */
.hidden {
  position: absolute;
  overflow: hidden;
  clip: rect(0 0 0 0);
  height: 1px;
  width: 1px;
  margin: -1px;
  padding: 0;
  border: 0;
}
```

If you ever wondered why, despite all your optimizations, Lighthouse still suggests eliminating render-blocking resources (fonts), in the same article Adrian Bece provides a few techniques to make Lighthouse happy, along with Gatsby Omni Font Loader, a performant asynchronous font loading and Flash Of Unstyled Text (FOUT) handling plugin for Gatsby.
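Coming back to the `wf-merriweather--loaded` class used in the CSS above: something has to add it once the fonts are in. A minimal sketch of that step with the Font Loading API could look like the following. The function name `loadFontsThenFlag`, the font file paths and the weights are placeholders for illustration and are not taken from Adrian’s article.

```javascript
// Hypothetical helper: load every font first, then add them all and flip the
// class in one go, so the switch from fallback to web font happens once.
// `FontFaceCtor` defaults to the browser's FontFace and is a parameter only
// so the function can be exercised outside a browser.
async function loadFontsThenFlag(doc, fontSpecs, loadedClass, FontFaceCtor = globalThis.FontFace) {
  const faces = fontSpecs.map(
    (spec) => new FontFaceCtor(spec.family, spec.src, spec.descriptors)
  );
  // If any font fails to load, this rejects and the fallback font stays.
  const loaded = await Promise.all(faces.map((face) => face.load()));
  loaded.forEach((face) => doc.fonts.add(face));
  doc.body.classList.add(loadedClass);
  return loaded;
}

if (typeof document !== "undefined" && "fonts" in document) {
  loadFontsThenFlag(
    document,
    [
      // Placeholder paths and weights.
      { family: "Merriweather", src: "url(/fonts/merriweather-regular.woff2)", descriptors: { weight: "400" } },
      { family: "Merriweather", src: "url(/fonts/merriweather-bold.woff2)", descriptors: { weight: "700" } },
    ],
    "wf-merriweather--loaded"
  ).catch(() => {
    // Keep the fallback font if loading fails.
  });
}
```

Because the class is only added after every `load()` promise has resolved, a failed download leaves the page on the fallback font rather than switching halfway.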

Now, many of us might be using a CDN or a third-party host to load web fonts from. In general, it’s better to self-host all your static assets if you can, so consider using google-webfonts-helper, a hassle-free way to self-host Google Fonts. And if that’s not possible, you can perhaps proxy the Google Font files through the page origin.

It's worth noting, though, that Google is doing quite a bit of work out of the box, so a server might need a bit of tweaking to avoid delays (thanks, Barry!).

This is especially important because, since Chrome v86 (released October 2020), cross-site resources like fonts can no longer be shared across sites through the same CDN, due to the partitioned browser cache. This behavior has been the default in Safari for years.

But if it’s not possible at all, there is a way to get to the fastest possible Google Fonts with Harry Roberts' snippet:

```html
<!-- By Harry Roberts.
  https://csswizardry.com/2020/05/the-fastest-google-fonts/

  - 1. Preemptively warm up the fonts’ origin.
  - 2. Initiate a high-priority, asynchronous fetch for the CSS file. Works in
  -    most modern browsers.
  - 3. Initiate a low-priority, asynchronous fetch that gets applied to the page
  -    only after it’s arrived. Works in all browsers with JavaScript enabled.
  - 4. In the unlikely event that a visitor has intentionally disabled
  -    JavaScript, fall back to the original method. The good news is that,
  -    although this is a render-blocking request, it can still make use of the
  -    preconnect which makes it marginally faster than the default.
  -->

<!-- [1] -->
<link rel="preconnect"
      href="https://fonts.gstatic.com"
      crossorigin />

<!-- [2] -->
<link rel="preload"
      as="style"
      href="$CSS&display=swap" />

<!-- [3] -->
<link rel="stylesheet"
      href="$CSS&display=swap"
      media="print" onload="this.media='all'" />

<!-- [4] -->
<noscript>
  <link rel="stylesheet"
        href="$CSS&display=swap" />
</noscript>
```

Harry’s strategy is to pre-emptively warm up the fonts’ origin first. Then we initiate a high-priority, asynchronous fetch for the CSS file. Afterwards, we initiate a low-priority, asynchronous fetch that gets applied to the page only after it’s arrived (with a print stylesheet trick). Finally, if JavaScript isn’t supported, we fall back to the original method.

Ah, talking about Google Fonts: you can shave up to 90% of the size of Google Fonts requests by declaring only the characters you need with `&text`. Plus, support for `font-display` was recently added to Google Fonts as well, so we can use it out of the box.
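To make the `&text` idea concrete, here is a small sketch that builds such a request URL. It assumes the Google Fonts `css2` endpoint with its `family`, `text` and `display` query parameters; the function name `googleFontsUrl` is made up, and only plain family names (no weight or axis syntax) are covered.

```javascript
// Hypothetical helper: build a Google Fonts CSS URL limited to the characters
// in `text`, with a font-display value attached.
function googleFontsUrl(family, text, display = "swap") {
  const url = new URL("https://fonts.googleapis.com/css2");
  // Each character only needs to be listed once.
  const uniqueChars = [...new Set(text)].join("");
  url.searchParams.set("family", family);
  url.searchParams.set("text", uniqueChars);
  url.searchParams.set("display", display);
  return url.toString();
}
```

For a logo or a headline set in a display face, `googleFontsUrl("Inconsolata", "Hello")` requests only the four distinct characters in that word, which is where the large savings come from.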

A quick word of caution though. If you use `font-display: optional`, it might be suboptimal to also use `preload` as it will trigger that web font request early (causing network congestion if you have other critical path resources that need to be fetched). Use `preconnect` for faster cross-origin font requests, but be cautious with `preload` as preloading fonts from a different origin will incur network contention. All of these techniques are covered in Zach’s Web font loading recipes.

On the other hand, it might be a good idea to opt out of web fonts (or at least the second-stage render) if the user has enabled Reduce Motion in accessibility preferences or has opted in for Data Saver Mode (see the `Save-Data` header), or when the user has slow connectivity (via Network Information API).

We can also use the `prefers-reduced-data` CSS media query to not define font declarations if the user has opted into data-saving mode (there are other use-cases, too). The media query would basically expose whether the `Save-Data` request header from the Client Hint HTTP extension is on or off, to allow for usage with CSS. It is currently supported only in Chrome and Edge behind a flag.

Metrics? To measure the web font loading performance, consider the All Text Visible metric (the moment when all fonts have loaded and all content is displayed in web fonts), Time to Real Italics as well as Web Font Reflow Count after first render. Obviously, the lower these metrics are, the better the performance is.
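The opt-out conditions mentioned above (Reduce Motion, Data Saver, slow connectivity) can also be checked in script before any font loading starts. The sketch below is one way to combine them; the function name `shouldLoadWebFonts` and the choice to treat `slow-2g` and `2g` as "slow" are assumptions for this example, not a recommendation from the original text.

```javascript
// Hypothetical helper: decide whether to go ahead with web fonts (or with the
// second stage of a two-stage render). `nav` is expected to look like
// `navigator`, and `matchMediaFn` like `window.matchMedia`.
function shouldLoadWebFonts(nav, matchMediaFn) {
  // The Network Information API is not available everywhere, hence the checks.
  const connection = nav && nav.connection;
  if (connection) {
    if (connection.saveData) return false;
    // effectiveType is one of "slow-2g", "2g", "3g" or "4g".
    // Assumption: only the two slowest values count as slow here.
    if (["slow-2g", "2g"].includes(connection.effectiveType)) return false;
  }
  if (matchMediaFn && matchMediaFn("(prefers-reduced-motion: reduce)").matches) {
    return false;
  }
  return true;
}

if (typeof window !== "undefined" && typeof navigator !== "undefined") {
  const loadFonts = shouldLoadWebFonts(navigator, (query) => window.matchMedia(query));
  // When `loadFonts` is false, skip the font loading code and stay on fallback fonts.
}
```

In browsers without `navigator.connection`, the function falls through to the media query check, so the default is to load the fonts.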

What about variable fonts, you might ask? It’s important to note that variable fonts might require significant performance consideration. They give us a much broader design space for typographic choices, but it comes at the cost of a single serial request as opposed to a number of individual file requests.

While variable fonts drastically reduce the overall combined file size of font files, that single request might be slow, blocking the rendering of all content on a page. So subsetting and splitting the font into character sets still matter. On the good side though, with a variable font in place, we’ll get exactly one reflow by default, so no JavaScript will be required to group repaints.

Now, what would make a bulletproof web font loading strategy then? Subset fonts and prepare them for the 2-stage-render, declare them with a `font-display` descriptor, use Font Loading API to group repaints and store fonts in a persistent service worker’s cache. On the first visit, inject the font `preload` hints just before the blocking external scripts. You could fall back to Bram Stein’s Font Face Observer if necessary. And if you’re interested in measuring the performance of font loading, Andreas Marschke explores performance tracking with Font API and UserTiming API.

Finally, don’t forget to include `unicode-range` to break down a large font into smaller language-specific fonts, and use Monica Dinculescu’s font-style-matcher to minimize a jarring shift in layout, due to sizing discrepancies between the fallback and the web fonts.

Alternatively, to emulate a web font for a fallback font, we can use `@font-face` descriptors to override font metrics (demo, enabled in Chrome 87). (Note that adjustments are complicated with complicated font stacks though.)
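For the service worker part of that strategy, a cache-first handler for font files is one possible shape. The sketch below assumes self-hosted fonts that can be recognised by their file extension; the names `cacheFirst` and `isFontUrl` and the cache name `fonts-v1` are made up for this example.

```javascript
// Hypothetical helper: answer from the cache when possible, otherwise fetch
// the font and keep a copy for next time. `cache` follows the Cache API
// (match/put) and `fetchFn` follows fetch().
async function cacheFirst(request, cache, fetchFn) {
  const cached = await cache.match(request);
  if (cached) return cached;
  const response = await fetchFn(request);
  // Only store successful responses; clone because a body can be read once.
  if (response.ok) await cache.put(request, response.clone());
  return response;
}

// Assumption: font files can be recognised by their extension.
function isFontUrl(url) {
  return /\.(woff2?|ttf|otf)(\?.*)?$/i.test(url);
}

// Service worker wiring; skipped when this file is loaded anywhere else.
if (typeof ServiceWorkerGlobalScope !== "undefined" && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener("fetch", (event) => {
    if (!isFontUrl(event.request.url)) return;
    event.respondWith(
      caches
        .open("fonts-v1")
        .then((cache) => cacheFirst(event.request, cache, (req) => fetch(req)))
    );
  });
}
```

Font files rarely change once published, which is why cache-first is a reasonable fit here; a new font version would typically ship under a new file name or a new cache name.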

Does the future look bright? With progressive font enrichment, eventually we might be able to "download only the required part of the font on any given page, and for subsequent requests for that font to dynamically ‘patch’ the original download with additional sets of glyphs as required on successive page views", as Jason Pamental explains it. Incremental Transfer Demo is already available, and it’s work in progress.

