Don’t Let Your Brain Deceive You: Avoiding Bias In Your UX Feedback

You know that user feedback is crucial — after all, your users will decide whether your app succeeds or not — but how do you know whether users are being fair and objective in their feedback? We can tell you: They won’t be. All of your users will be giving you biased feedback. They can’t help it.

When soliciting and listening to user feedback, you will inevitably run into bias on both sides of the coin: Biases will influence the people providing feedback, and your own biases will influence the way you receive that feedback.

It’s important to be aware of this, especially when reviewing comments about your user experience (UX). Accurate and unbiased feedback is essential to developing the best possible version of your app. Although you can’t erase your own biases (or those of your users), you can take steps to overcome common biases once you know what they are and how they might appear. The next time you ask your users for input, keep bias in mind and evaluate how you respond to users’ comments. Is your action (or inaction) driven by bias?

There are dozens of cognitive biases, and they take many different forms, but a few dominant types come up again and again for product teams seeking user feedback. In this article, we’ll take a closer look at four of the most common cognitive biases that appear when collecting and interpreting UX feedback, and at how you can nip them in the bud before they skew your production process:

- Confirmation bias

- Framing bias

- Friendliness bias

- False-consensus bias

Let’s dig into the first cognitive bias.

Confirmation Bias

This is probably the best-known bias, and people in all professions encounter it. Psychologist Daniel Kahneman, who introduced the notion of cognitive bias together with mathematical psychologist Amos Tversky, says that confirmation bias exists “when you have an interpretation, and you adopt it, and then, top down, you force everything to fit that interpretation.” Confirmation bias occurs when you gravitate towards responses that align with your own beliefs and preconceptions.

Solely accepting feedback that aligns with your established narrative creates an echo chamber that will severely affect your approach to UX design. One dangerous effect of confirmation bias is the backfire effect, in which you begin to reject any results that prove your opinions wrong. As a designer, you are tasked with creating the UX that best serves your audience, but your design will be based in part on your subjective tastes, beliefs and background. Sometimes, as we learned firsthand, this bias can sneak its way into your process — not so much in how you interpret user feedback, but in how you ask for it.

In the early years of my agency designing web and mobile apps for clients, we used to have our UX designers write user surveys and conduct interviews to get feedback on products. After all, the designers understood the UX like no one else, and, ultimately, they’d be the ones to make any changes. Strangely, after doing this for about a year, we noticed that we weren’t getting a lot of actionable feedback. We began to doubt the value of even creating these surveys. Before tossing them out entirely, we experimented by removing the UX designers from the feedback process. We had one of our quality-assurance (QA) engineers write the user survey and collect the feedback — and we quickly found the results were vastly more interesting and actionable.

Although our UX designers were open to hearing feedback, we realized they were subconsciously formulating survey and interview questions in a manner that would easily confirm their own preconceptions about the design they created. For instance, our UX designers asked, “Did the wide variety of products available make it difficult to find the specific product you wanted?” This phrasing led our respondents to perceive that finding a product was difficult in the first place — leaving no room for those who found it easy to reflect that in their answers. The question also suggested a cause of difficulty (the wide variety of products), leaving no room for respondents to offer other potential reasons for difficulty finding a product.

When our QA engineers took the reins, they wrote the question as, “Did you have any difficulty finding the product you wanted? If so, why?” Having no strong preexisting beliefs about the design, they posed an unbiased question — leaving room for more genuine answers from the respondents who had difficulty finding products, as well as those who didn’t. By following up with an open-ended “Why?,” we were able to collect more diverse and informative answers, helping us to learn more about the various reasons that respondents found difficulty with our design.

Confirmation bias often shows up when one is creating user surveys. In your survey, you might inadvertently ask leading questions, phrased in a way that generates answers that validate what you already believe. Our UX designers asked leading questions like, “Did the branding provide a sense of professionalism and trust?” Questions like this don’t allow space for users to provide negative or opposing feedback. By removing our designers from the feedback process, the questions naturally became less biased in phrasing — our QA engineers asked non-leading questions like, “What sort of impressions did the app’s look and feel provide?” As a result, we began to see far more objective and truly helpful feedback coming from users.

Avoiding Confirmation Bias

Overcoming confirmation bias requires collecting feedback from a diverse group of people. The bigger the pool of users providing feedback, the more perspectives you add to the mix. Don’t survey or interview users from only one group, demographic or background — do your best to get a large sample size filled with users who represent all demographics in your target market. This way, the feedback you receive won’t be limited to one group’s set of preconceptions.

Write survey questions carefully to avoid leading questions. Instead of asking, “How much did you like feature X of the app?,” you might ask, “Rate your satisfaction with feature X on the following scale” (and provide a scale that ranges from “strongly dislike” to “strongly like”). The first phrasing suggests that the user should like the feature in question, whereas the second phrasing doesn’t make an inherent suggestion. Have someone else read your survey questions before sending them out to users, to check that they sound impartial.

UX designers can also avoid falling prey to confirmation bias by using more quantitative data — although, as you’ll see below, even interpretation of numerical data isn’t immune to bias.

Framing Bias

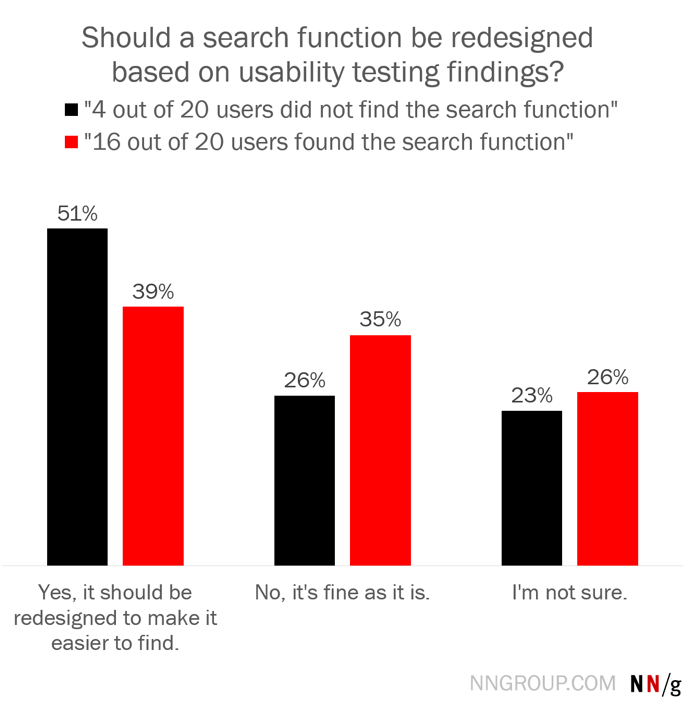

Framing bias is based on how you choose to frame the user feedback you’ve received. This kind of bias can make a designer interpret an objective metric in a positive or negative light. Nielsen Norman Group offers a fascinating example that describes the results of a user feedback survey in two ways. In the first, 4 out of 20 users said they could not find the search function on a website; in the second, 16 out of 20 users stated that they found the search function.

Nielsen Norman Group presented these two results — which communicate the same information — to a group of UX designers, with half receiving the success rate (16 out of 20) and half the failure rate (4 out of 20). It found that only 39% of those who received the positive statement were likely to call for a redesign, compared to 51% of respondents who received the failure rate. Despite the metric being the same, a framing bias led to these professional UX designers behaving differently.

Framing bias in data analysis can lead to related effects, such as the clustering illusion, in which people mistakenly perceive patterns in data that are actually coincidental, or the anchoring effect, in which people give much more weight to the first piece of data they look at than to the rest. These mental traps can distort decisions that you believe you are making in the best interest of the product.

Avoiding Framing Bias

You can avoid framing bias by becoming more self-aware of how you look at data — and by adding more frames.

For every piece of feedback you assess, ask yourself how you’re framing the data. This awareness will help you learn not to take your first interpretation as a given, and to understand why your perspective feels positive or negative. Then, identify at least one or two alternative frames you could use to phrase the same result. Let’s say one of your survey results shows that 70% of users feel your UI is intuitive. This gives you a surge of pride and validation, but you also recognize that it’s framed as a positive. Using an alternative frame, you write the result again as such: 30% of users do not feel the UI is intuitive. By looking at both frames, you gain a less biased and more well-rounded perception of what this data means for your product.
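One way to make this reframing habit routine is to have your reporting script state every result in both frames at once. The snippet below is a minimal sketch in Python. The function name `both_frames`, its parameters and the sample of 100 respondents are assumptions made up for illustration (the example above only gives the 70% figure), not part of any survey tool.

```python
def both_frames(positive, total, positive_phrase, negative_phrase):
    """Phrase one survey result as both a positive and a negative frame."""
    if total <= 0 or not 0 <= positive <= total:
        raise ValueError("need total > 0 and 0 <= positive <= total")
    negative = total - positive
    pos_pct = round(100 * positive / total)
    neg_pct = 100 - pos_pct  # keeps the two frames summing to 100%
    return (
        f"{pos_pct}% of users ({positive} of {total}) {positive_phrase}",
        f"{neg_pct}% of users ({negative} of {total}) {negative_phrase}",
    )


# Hypothetical sample: 70 of 100 respondents rated the UI as intuitive.
for frame in both_frames(
    70, 100,
    "feel the UI is intuitive",
    "do not feel the UI is intuitive",
):
    print(frame)
```

Printing the two sentences side by side in every report means that nobody on the team sees only the flattering version of a number.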

Be wise enough to admit when you’re unsure of what action to take based on your data. Get a second opinion from someone on your team. If one piece of feedback seems particularly important and difficult to interpret, consider sending out a new survey or gathering more feedback on that topic. Perhaps you could ask those users who don’t feel your UI is intuitive to elaborate on specific aspects of the UI (colors, button placement, text, etc.). What specific, impartial questions could you create to gain deeper insight from users?

Friendliness Bias

Of course, you want to be civil and professional with the people who provide UX feedback, but it doesn’t pay to be too friendly. In other words, you don’t want to be so accommodating that it skews their responses.

Friendliness bias — also called acquiescence bias or user research bias — occurs when the people providing feedback tell you the answers they think you want to hear. Sometimes, this happens because they think fondly of you and respect your professional opinion, but the reason can also be less flattering.

People might tell you what you want to hear because they’re tired of being questioned, and they think positive answers will get them out of the room (or out of the online survey) faster. This is the principle of least effort, which states that people will try to use the smallest amount of thought, time and energy to avoid resistance and complete a task. This principle has probably already influenced the usability of your UX design, but you might not have considered how it comes into play when collecting feedback.

Whatever the cause, friendliness bias can undermine the hard work and market research you’ve conducted, leaving you with insincere data that you can’t use effectively.

Avoiding Friendliness Bias

Friendliness bias can be avoided by removing yourself from the picture, because most people don’t like to give unfavorable feedback face to face.

If gathering UX feedback involves in-person questionnaires or focus groups, have someone outside of your development team serve as a facilitator. The facilitator should make it clear that they are not responsible for the design of the product. This way, people might feel more comfortable providing honest (and negative) feedback.

Collecting feedback digitally is also a helpful way to reduce the chance of your data being compromised by friendliness bias. People might open up more when sitting behind a screen, because they don’t have to face the reactions of the survey’s providers.

Be mindful, especially if you go the digital route, of survey fatigue. When you ask too many questions, your users might begin to tire partway through the survey. This can result in people simply selecting answers at random (or choosing the most favorable answers) just to finish faster and expend the least amount of effort. To avoid friendliness bias due to survey fatigue, keep surveys as short as possible and phrase the questions in very simple terms. Don’t make all questions required, and edit the survey diligently to cut all questions that aren’t truly relevant or necessary.
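If you collect rating-scale answers digitally, a rough check can help you spot responses that may have been affected by fatigue. The sketch below, in Python, flags a response whose final answers are all identical, which is one possible sign that the respondent stopped reading partway through. The function name `looks_straightlined` and the default window of five questions are assumptions for illustration. Treat a flag as a prompt to look more closely at that response, not as proof that the answers are insincere.

```python
def looks_straightlined(answers, tail=5):
    """Return True if the last `tail` ratings in a response are all the same.

    `answers` is one respondent's ratings in survey order, e.g. [4, 2, 5, ...].
    Responses shorter than `tail` are never flagged.
    """
    if tail < 2:
        raise ValueError("tail must be at least 2")
    if len(answers) < tail:
        return False
    return len(set(answers[-tail:])) == 1


# Hypothetical responses on a 1-5 scale.
varied = [4, 2, 5, 3, 1, 5, 4, 5, 5]
tired = [4, 2, 5, 3, 5, 5, 5, 5, 5]
print(looks_straightlined(varied), looks_straightlined(tired))
```

If many responses are flagged at around the same question, that is a hint that the survey is too long at that point.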

False-Consensus Bias

This form of bias happens when developers overestimate the number of people who will agree with their idea or design, creating a false consensus. Put simply, false consensus is the assumption that others will think the same way as you.

A 1976 Stanford study asked 104 college students to walk around campus wearing a sandwich board advertising a restaurant. The study found that 62% of those who agreed to wear the sign believed others would respond the same way, and that 67% of students who refused to wear the sign thought their peers would also refuse. Each group assumed that most of its peers would make the same choice it had made, which is a clear example of false-consensus bias.

As outlined above, our own UX designers fell into false-consensus bias when writing user survey questions, unintentionally phrasing questions with the assumption that users would appreciate the same UX features that the designers appreciated. Even though the core goal in UX design is to set aside your personal beliefs in favor of the wants and needs of your audience, you can’t help but see your product through your own lens — making it difficult to imagine that others would see it any other way. This underscores the importance of having team members with different backgrounds (especially those with expertise outside of UX design) be involved in the feedback process.

Avoiding False-Consensus Bias

False-consensus bias can be avoided by identifying and articulating your own assumptions. When you begin creating a user survey or crafting a test group, ask yourself, “What do I think the results of this feedback will be?” Write them down — these are your assumptions. Even better, ask a friend or coworker to listen to you describe the product, and have them write down the assumptions and opinions they hear from you.

Once you’re aware of your perceptions, you can design the feedback process to ensure you don’t favor your own opinions. With your assumptions sitting in front of you, try this exercise: Pretend every single one of your assumptions is wrong. If this is the case, which of these assumptions would be the riskiest to your product’s success? Which ones would cause widespread dissatisfaction across users? Craft questions for your users that challenge those risky assumptions.

Just as with confirmation bias, it’s important to collect feedback from a wide range of users, across all demographics and backgrounds. Ensure you’re not just surveying people who work closely with you or who come from a similar background — these people are likely to share the same opinions and preconceptions as you, which can reinforce your false consensus.

Breaking Down Biases Makes User Feedback More Valuable

Bias is universal, but so too are the steps you can take to counter it. People, including you, can’t shed their biases, but that doesn’t mean you have to let them interfere with your work. By understanding what each bias is and breaking down the ways it appears during the feedback process, you can put measures in place to overcome misleading preconceptions and gather the most impartial feedback possible.

Ensure that all of your questions are carefully worded and edited, because this will improve clarity and maintain participant focus. To avoid skewed data, always involve as large and diverse a group as possible, and try to remove yourself from the feedback — both in person as the facilitator of the survey or test group, and emotionally as the reviewer of the comments. This will encourage participants to answer more honestly about their experience with your UX, while preventing you from projecting your own assumptions and framing onto their feedback.

You might find it helpful to bring in a second opinion on the reviews as you start to establish bias awareness as a permanent part of your feedback and testing practices. It’s not an easy process, but when you can reduce the influence of bias on your work, you can get to what really matters: designing a better experience for your users.
