Front-End Performance 2021: Networking, HTTP/2, HTTP/3

This guide has been kindly supported by our friends at LogRocket, a service that combines frontend performance monitoring, session replay, and product analytics to help you build better customer experiences. LogRocket tracks key metrics, incl. DOM complete, time to first byte, first input delay, client CPU and memory usage. Get a free trial of LogRocket today.

Table Of Contents

- Getting Ready: Planning And Metrics

- Setting Realistic Goals

- Defining The Environment

- Assets Optimizations

- Build Optimizations

- Delivery Optimizations

- Networking, HTTP/2, HTTP/3

- Testing And Monitoring

- Quick Wins

- Everything on one page

- Download The Checklist (PDF, Apple Pages, MS Word)

- Subscribe to our email newsletter to not miss the next guides.

Networking, HTTP/2, HTTP/3

- Is OCSP stapling enabled?

By enabling OCSP stapling on your server, you can speed up your TLS handshakes. The Online Certificate Status Protocol (OCSP) was created as an alternative to the Certificate Revocation List (CRL) protocol. Both protocols are used to check whether an SSL certificate has been revoked. However, the OCSP protocol does not require the browser to spend time downloading and then searching a list for certificate information, hence reducing the time required for a handshake.
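One way to verify stapling is to ask the server for its OCSP status during the handshake and look at what comes back. The sketch below assumes the `openssl` command-line tool is installed and relies on the wording `s_client -status` prints for a stapled response; the function names are made up for illustration.

```python
import subprocess


def s_client_command(host: str, port: int = 443) -> list[str]:
    """Build an `openssl s_client` call that requests a stapled OCSP response."""
    return [
        "openssl", "s_client",
        "-connect", f"{host}:{port}",
        "-servername", host,
        "-status",  # ask the server to include its OCSP status in the handshake
    ]


def has_stapled_response(s_client_output: str) -> bool:
    """Return True if the s_client output reports a successful stapled OCSP response."""
    return "OCSP Response Status: successful" in s_client_output


def check_stapling(host: str, port: int = 443, timeout: float = 10.0) -> bool:
    """Run openssl against a live host (needs network access and the openssl CLI)."""
    result = subprocess.run(
        s_client_command(host, port),
        input="",  # empty stdin so s_client exits once the handshake is done
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return has_stapled_response(result.stdout)
```

Calling `check_stapling("example.com")` should return `True` only when the server staples a valid response; without stapling, openssl typically reports that no OCSP response was sent.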

- Have you reduced the impact of SSL certificate revocation?

In his article on "The Performance Cost of EV Certificates", Simon Hearne provides a great overview of common certificates, and the impact that the choice of certificate may have on overall performance. As Simon writes, in the world of HTTPS, there are a few types of certificate validation levels used to secure traffic:

- Domain Validation (DV) validates that the certificate requestor owns the domain,

- Organisation Validation (OV) validates that an organisation owns the domain,

- Extended Validation (EV) validates that an organisation owns the domain, with rigorous validation.

It’s important to note that all of these certificates are the same in terms of technology; they only differ in information and properties provided in those certificates.

EV certificates are expensive and time-consuming as they require a human to review a certificate and ensure its validity. DV certificates, on the other hand, are often provided for free, e.g. by Let’s Encrypt, an open, automated certificate authority that’s well integrated into many hosting providers and CDNs. In fact, at the time of writing, it powers over 225 million websites (PDF), although it makes for only 2.69% of the pages (opened in Firefox).

So what’s the problem then? The issue is that EV certificates do not fully support OCSP stapling mentioned above. While stapling allows the server to check with the Certificate Authority if the certificate has been revoked and then add ("staple") this information to the certificate, without stapling the client has to do all the work, resulting in unnecessary requests during the TLS negotiation. On poor connections, this might end up with noticeable performance costs (1000ms+).

EV certificates aren’t a great choice for web performance, and they can cause a much bigger impact on performance than DV certificates do. For optimal web performance, always serve an OCSP stapled DV certificate. They are also much cheaper than EV certificates and less hassle to acquire. Well, at least until CRLite is available.

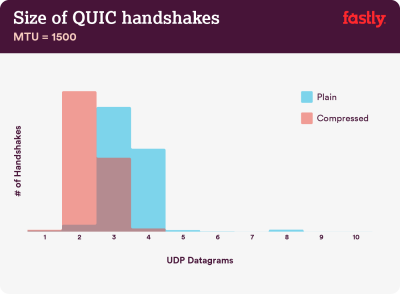

Compression matters: 40–43% of uncompressed certificate chains are too large to fit in a single QUIC flight of 3 UDP datagrams. (Image credit: Fastly)

Note: With QUIC/HTTP/3 upon us, it’s worth noting that the TLS certificate chain is the one variable-sized content that dominates the byte count in the QUIC handshake. The size varies between a few hundred bytes and over 10 KB.

So keeping TLS certificates small matters a lot on QUIC/HTTP/3, as large certificates will cause multiple handshakes. Also, we need to make sure that the certificates are compressed, as otherwise certificate chains would be too large to fit in a single QUIC flight.
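To get a feel for the numbers, here is a rough back-of-the-envelope check. It takes the "3 UDP datagrams" figure from the caption above and assumes about 1200 usable bytes per datagram; the certificate sizes and the compression ratio are hypothetical inputs, not measurements.

```python
def first_flight_budget(datagrams: int = 3, payload_bytes: int = 1200) -> int:
    """Bytes the server can send in its first flight under the stated assumptions."""
    return datagrams * payload_bytes


def chain_fits_first_flight(
    cert_sizes: list[int],
    compression_ratio: float = 1.0,
    other_handshake_bytes: int = 0,
) -> bool:
    """Check whether a certificate chain fits into the first flight.

    cert_sizes: size in bytes of each certificate in the chain.
    compression_ratio: compressed size divided by original size (1.0 = uncompressed).
    other_handshake_bytes: room taken by the rest of the handshake messages.
    """
    chain_bytes = sum(cert_sizes) * compression_ratio
    return chain_bytes + other_handshake_bytes <= first_flight_budget()


# Hypothetical chain of three certificates, 4000 bytes in total.
chain = [1500, 1300, 1200]
print(chain_fits_first_flight(chain))                         # uncompressed
print(chain_fits_first_flight(chain, compression_ratio=0.7))  # assumed 30% saving
```

With these made-up sizes the uncompressed chain overshoots the budget while the compressed one fits, which is the effect the chart describes.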

You can find far more detail on the problem, and pointers to the solutions, in:

- EV Certificates Make The Web Slow and Unreliable by Aaron Peters,

- The impact of SSL certificate revocation on web performance by Matt Hobbs,

- The Performance Cost of EV Certificates by Simon Hearne,

- Does the QUIC handshake require compression to be fast? by Patrick McManus.

- Have you adopted IPv6 yet?

Because we’re running out of space with IPv4 and major mobile networks are adopting IPv6 rapidly (the US has almost reached a 50% IPv6 adoption threshold), it’s a good idea to update your DNS to IPv6 to stay bulletproof for the future. Just make sure that dual-stack support is provided across the network, as it allows IPv6 and IPv4 to run simultaneously alongside each other. After all, IPv6 is not backwards-compatible. Also, studies show that IPv6 can make websites 10 to 15% faster due to neighbor discovery (NDP) and route optimization.

- Make sure all assets run over HTTP/2 (or HTTP/3).

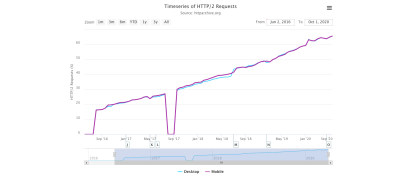

With Google pushing towards a more secure HTTPS web over the last few years, a switch to an HTTP/2 environment is definitely a good investment. In fact, according to Web Almanac, 64% of all requests are running over HTTP/2 already. It’s important to understand that HTTP/2 isn’t perfect and has prioritization issues, but it’s supported very well; and, in most cases, you’re better off with it.

A word of caution: HTTP/2 Server Push is being removed from Chrome, so if your implementation relies on Server Push, you might need to revisit it. Instead, we might be looking at Early Hints, which are already integrated as an experiment in Fastly.

If you’re still running on HTTP, the most time-consuming task will be to migrate to HTTPS first, and then adjust your build process to cater for HTTP/2 multiplexing and parallelization. Bringing HTTP/2 to Gov.uk is a fantastic case study on doing just that, finding a way through CORS, SRI and WPT along the way. For the rest of this article, we assume that you’re either switching to or have already switched to HTTP/2.
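If you are unsure which protocol a given host actually negotiates, a small check with Python's standard `ssl` module can tell you. This is a minimal sketch: it only covers HTTP/2 over TLS (HTTP/3 runs over UDP and cannot be detected this way), and the helper names are invented for this example.

```python
import socket
import ssl
from typing import Optional


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Open a TLS connection and return the ALPN protocol the server selected."""
    context = ssl.create_default_context()
    # Offer HTTP/2 first and HTTP/1.1 as the fallback.
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.selected_alpn_protocol()


def speaks_http2(alpn_protocol: Optional[str]) -> bool:
    """True if the negotiated ALPN protocol is HTTP/2."""
    return alpn_protocol == "h2"
```

For example, `speaks_http2(negotiated_protocol("example.com"))` needs network access; run it against each asset host (CDN, fonts, analytics) rather than only the main origin.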

- Properly deploy HTTP/2.

Again, serving assets over HTTP/2 can benefit from a partial overhaul of how you’ve been serving assets so far. You’ll need to find a fine balance between packaging modules and loading many small modules in parallel. At the end of the day, the best request is still no request; however, the goal is to find a fine balance between quick first delivery of assets and caching.

On the one hand, you might want to avoid concatenating assets altogether, instead breaking down your entire interface into many small modules, compressing them as a part of the build process and loading them in parallel. A change in one file won’t require the entire style sheet or JavaScript to be re-downloaded. It also minimizes parsing time and keeps the payloads of individual pages low.

On the other hand, packaging still matters. By using many small scripts, overall compression will suffer and the cost of retrieving objects from the cache will increase. The compression of a large package will benefit from dictionary reuse, whereas small separate packages will not. There’s standard work to address that, but it’s far out for now. Secondly, browsers have not yet been optimized for such workflows. For example, Chrome will trigger inter-process communications (IPCs) linear to the number of resources, so including hundreds of resources will have browser runtime costs.
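The dictionary-reuse effect is easy to reproduce. The sketch below compresses a set of hypothetical, deliberately similar modules once on their own and once as a single bundle. It uses `zlib` (DEFLATE, the algorithm behind gzip) as a stand-in for whatever your server applies; Brotli will give different absolute numbers.

```python
import zlib


def separate_vs_bundled(modules: list[bytes]) -> tuple[int, int]:
    """Return (bytes when each module is compressed alone, bytes when compressed as one bundle)."""
    separate = sum(len(zlib.compress(module, 9)) for module in modules)
    bundled = len(zlib.compress(b"\n".join(modules), 9))
    return separate, bundled


# Hypothetical modules that share boilerplate, as real component files often do.
modules = [
    f"export function render{i}(props) {{ return createElement('div', props, 'item {i}'); }}".encode()
    for i in range(50)
]

separate, bundled = separate_vs_bundled(modules)
print(f"compressed separately: {separate} bytes, compressed as one bundle: {bundled} bytes")
```

The more code the modules share, the larger the gap, because each small file has to start with an empty compression dictionary.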

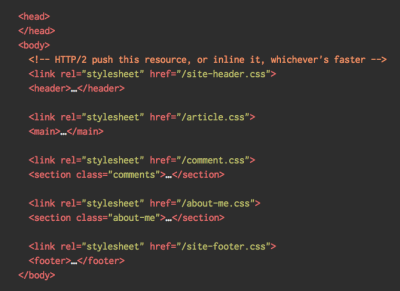

To achieve the best results with HTTP/2, consider loading CSS progressively, as suggested by Chrome’s Jake Archibald. In fact, in-body CSS no longer blocks rendering in Chrome. There are some prioritization issues, so it’s not as straightforward as it sounds, but it’s worth experimenting with.

You could get away with HTTP/2 connection coalescing, which allows you to use domain sharding while benefiting from HTTP/2, but achieving this in practice is difficult, and in general, it’s not considered to be good practice. Also, HTTP/2 and Subresource Integrity don’t always get on.

What to do? Well, if you’re running over HTTP/2, sending around 6–10 packages seems like a decent compromise (and isn’t too bad for legacy browsers). Experiment and measure to find the right balance for your website.
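As a starting point for experiments, the sketch below splits a set of modules into a fixed number of similarly sized packages using a greedy largest-first heuristic. This is only an illustration: real bundlers split along the dependency graph and change frequency, and the module names and sizes here are hypothetical.

```python
import heapq


def group_into_bundles(module_sizes: dict[str, int], bundle_count: int = 8) -> list[list[str]]:
    """Greedy largest-first split of modules into at most `bundle_count` bundles of similar size."""
    # Min-heap of (current bundle size, bundle index): the lightest bundle is always on top.
    heap = [(0, index) for index in range(bundle_count)]
    heapq.heapify(heap)
    bundles: list[list[str]] = [[] for _ in range(bundle_count)]
    # Place the biggest modules first; ties are broken by name to keep the result stable.
    for name, size in sorted(module_sizes.items(), key=lambda item: (-item[1], item[0])):
        total, index = heapq.heappop(heap)
        bundles[index].append(name)
        heapq.heappush(heap, (total + size, index))
    return [bundle for bundle in bundles if bundle]


# Hypothetical module sizes in KB.
sizes = {"vendor": 50, "app": 40, "charts": 30, "forms": 20, "icons": 10}
print(group_into_bundles(sizes, bundle_count=2))  # [['vendor', 'forms', 'icons'], ['app', 'charts']]
```

Try values between 6 and 10 for `bundle_count` and measure each variant on your own pages before settling on one.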

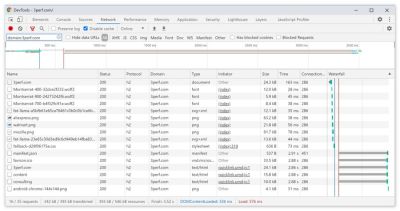

- Do we send all assets over a single HTTP/2 connection?

One of the main advantages of HTTP/2 is that it allows us to send assets down the wire over a single connection. However, sometimes we might have done something wrong, e.g. have a CORS issue or a misconfigured crossorigin attribute, so the browser would be forced to open a new connection.

To check whether all requests use a single HTTP/2 connection, or something’s misconfigured, enable the "Connection ID" column in DevTools → Network. E.g., here, all requests share the same connection (286), except manifest.json, which opens a separate one (451).
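With many requests, scanning the column by eye gets tedious. The following sketch flags requests that did not use the most common connection. It assumes you have already collected (URL, connection ID) pairs for a single origin, for instance from an exported HAR file; the function name and every URL except manifest.json are made up.

```python
from collections import Counter


def requests_off_main_connection(requests: list[tuple[str, str]]) -> list[str]:
    """Given (url, connection_id) pairs, return the URLs not served on the most-used connection."""
    if not requests:
        return []
    counts = Counter(connection_id for _, connection_id in requests)
    main_connection, _ = counts.most_common(1)[0]
    return [url for url, connection_id in requests if connection_id != main_connection]


# Connection IDs mirror the example in the text above.
sample = [
    ("/index.html", "286"),
    ("/app.css", "286"),
    ("/app.js", "286"),
    ("/manifest.json", "451"),
]
print(requests_off_main_connection(sample))  # ['/manifest.json']
```

Run it per origin: requests to third-party hosts legitimately use their own connections and would otherwise show up as false positives.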

- Do your servers and CDNs support HTTP/2?

Different servers and CDNs (still) support HTTP/2 differently. Use CDN Comparison to check your options, or quickly look up how your servers are performing and which features you can expect to be supported.

Consult Pat Meenan’s incredible research on HTTP/2 priorities (video) and test server support for HTTP/2 prioritization. According to Pat, it’s recommended to enable BBR congestion control and set tcp_notsent_lowat to 16KB for HTTP/2 prioritization to work reliably on Linux 4.9 kernels and later (thanks, Yoav!). Andy Davies did similar research on HTTP/2 prioritization across browsers, CDNs and Cloud Hosting Services.

While on it, double check if your kernel supports TCP BBR and enable it if possible. It’s currently used on Google Cloud Platform, Amazon CloudFront, Linux (e.g. Ubuntu).
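On a Linux server, both settings can be read from `/proc/sys/net/ipv4`. The sketch below is a read-only check with invented function names; it treats "16KB" as exactly 16384 bytes, which is an assumption, and it does not change any kernel setting.

```python
from pathlib import Path
from typing import Callable

PROC_IPV4 = Path("/proc/sys/net/ipv4")


def read_sysctl(name: str) -> str:
    """Read a net.ipv4 sysctl value from /proc (Linux only)."""
    return (PROC_IPV4 / name).read_text().strip()


def check_prioritization_settings(read: Callable[[str], str] = read_sysctl) -> dict[str, bool]:
    """Report whether BBR is available and active, and whether tcp_notsent_lowat is 16 KB."""
    return {
        # Only lists algorithms whose kernel modules are currently loaded.
        "bbr_available": "bbr" in read("tcp_available_congestion_control").split(),
        "bbr_active": read("tcp_congestion_control") == "bbr",
        "notsent_lowat_16kb": int(read("tcp_notsent_lowat")) == 16 * 1024,
    }
```

Calling `check_prioritization_settings()` on the server returns three booleans. The `read` parameter exists so the check can be exercised with canned values on machines that are not running Linux.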

- Is HPACK compression in use?

If you’re using HTTP/2, double-check that your servers implement HPACK compression for HTTP response headers to reduce unnecessary overhead. Some HTTP/2 servers may not fully support the specification, with HPACK being an example. H2spec is a great (if very technically detailed) tool to check that. HPACK’s compression algorithm is quite impressive, and it works.

- Make sure the security on your server is bulletproof.

All browser implementations of HTTP/2 run over TLS, so you will probably want to avoid security warnings or some elements on your page not working. Double-check that your security headers are set properly, eliminate known vulnerabilities, and check your HTTPS setup.

Also, make sure that all external plugins and tracking scripts are loaded via HTTPS, that cross-site scripting isn’t possible and that both HTTP Strict Transport Security headers and Content Security Policy headers are properly set.
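A quick automated check for the two headers mentioned above might look like the sketch below. It only verifies that the headers are present and non-empty, not that their values form a sensible policy, and the function names are invented for this example.

```python
import urllib.request

REQUIRED_SECURITY_HEADERS = ("strict-transport-security", "content-security-policy")


def fetch_headers(url: str, timeout: float = 10.0) -> dict[str, str]:
    """Fetch a URL and return its response headers (needs network access)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return dict(response.headers.items())


def missing_security_headers(response_headers: dict[str, str]) -> list[str]:
    """Return the required security headers that are absent or empty."""
    # Header names are case-insensitive, so compare in lowercase.
    present = {name.lower() for name, value in response_headers.items() if value.strip()}
    return [name for name in REQUIRED_SECURITY_HEADERS if name not in present]
```

For instance, `missing_security_headers(fetch_headers("https://example.com/"))` returns an empty list when both headers are set. A check like this complements a dedicated security scanner but does not replace one.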

- Do your servers and CDNs support HTTP/3?

While HTTP/2 has brought a number of significant performance improvements to the web, it also left quite a lot of room for improvement, especially head-of-line blocking in TCP, which is noticeable on slow networks with significant packet loss. HTTP/3 is solving these issues for good (article).

To address HTTP/2 issues, the IETF, along with Google, Akamai and others, has been working on a new protocol that has recently been standardized as HTTP/3.

Robin Marx has explained HTTP/3 very well, and the following summary is based on his explanation. At its core, HTTP/3 is very similar to HTTP/2 in terms of features, but under the hood it works very differently. HTTP/3 provides a number of improvements: faster handshakes, better encryption, more reliable independent streams, and better flow control. A notable difference is that HTTP/3 uses QUIC as the transport layer, with QUIC packets encapsulated on top of UDP datagrams, rather than TCP.

QUIC fully integrates TLS 1.3 into the protocol, while in TCP it’s layered on top. In the typical TCP stack, we have a few round-trip times of overhead because TCP and TLS need to do their own separate handshakes, but with QUIC both of them can be combined and completed in just a single round trip. Since TLS 1.3 allows us to set up encryption keys for a subsequent connection, from the second connection onward, we can already send and receive application layer data in the first round trip, which is called "0-RTT".
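The difference becomes tangible once round trips are turned into milliseconds. The sketch below counts the round trips needed before the first HTTP request can be sent for a few common stacks. It ignores DNS lookups, packet loss and optimizations such as TLS session resumption over TCP, and the 100 ms round-trip time is a hypothetical value.

```python
# Round trips that must complete before the first HTTP request can be sent.
HANDSHAKE_ROUND_TRIPS = {
    "tcp+tls1.2": 3,  # 1 for the TCP handshake, 2 for a full TLS 1.2 handshake
    "tcp+tls1.3": 2,  # 1 for the TCP handshake, 1 for TLS 1.3
    "quic": 1,        # transport and TLS 1.3 handshakes combined
    "quic-0rtt": 0,   # resumed connection: data travels in the first flight
}


def handshake_delay_ms(stack: str, rtt_ms: float) -> float:
    """Time spent on connection setup before the first request, in milliseconds."""
    return HANDSHAKE_ROUND_TRIPS[stack] * rtt_ms


# Hypothetical mobile connection with a 100 ms round-trip time.
for stack in HANDSHAKE_ROUND_TRIPS:
    print(f"{stack}: {handshake_delay_ms(stack, 100)} ms")
```

On high-latency connections the saving is paid back on every new connection, which is why the effect is most visible on mobile networks.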

Also, the header compression algorithm of HTTP/2 was entirely rewritten, along with its prioritization system. Plus, QUIC supports connection migration from Wi-Fi to a cellular network via connection IDs in the header of each QUIC packet. Most of the implementations are done in user space, not kernel space (as is the case with TCP), so we should expect the protocol to keep evolving in the future.

Would it all make a big difference? Probably yes, especially having an impact on loading times on mobile, but also on how we serve assets to end users. While in HTTP/2, multiple requests share a connection, in HTTP/3 requests also share a connection but stream independently, so a dropped packet no longer impacts all requests, just the one stream.

That means that while with one large JavaScript bundle the processing of assets will be slowed down when one stream pauses, the impact will be less significant when multiple files stream in parallel (HTTP/3). So packaging still matters.

HTTP/3 is still a work in progress. Chrome, Firefox and Safari have implementations already. Some CDNs support QUIC and HTTP/3 already. In late 2020, Chrome started deploying HTTP/3 and IETF QUIC, and in fact all Google services (Google Analytics, YouTube etc.) are already running over HTTP/3. LiteSpeed Web Server supports HTTP/3, but Apache, nginx and IIS don’t support it yet, although that’s likely to change quickly in 2021.

The bottom line: if you have an option to use HTTP/3 on the server and on your CDN, it’s probably a very good idea to do so. The main benefit will come from fetching multiple objects simultaneously, especially on high-latency connections. We don’t know for sure yet as there isn’t much research done in that space, but first results are very promising.

If you want to dive more into the specifics and advantages of the protocol, here are some good starting points to check:

- HTTP/3 Explained, a collaborative effort to document the HTTP/3 and the QUIC protocols. Available in various languages, also as PDF.

- Leveling Up Web Performance With HTTP/3 with Daniel Stenberg.

- An Academic’s Guide to QUIC with Robin Marx introduces basic concepts of the QUIC and HTTP/3 protocols, explains how HTTP/3 handles head-of-line blocking and connection migration, and how HTTP/3 is designed to be evergreen (thanks, Simon!).

- You can check if your server is running on HTTP/3 on HTTP3Check.net.
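If you prefer a scripted check, note that servers usually advertise HTTP/3 through the `Alt-Svc` response header on an existing HTTP/1.1 or HTTP/2 connection. The sketch below extracts the HTTP/3 protocol IDs from such a header value. The parsing is deliberately simple and assumes there are no commas inside quoted values, and the function name is made up.

```python
def advertised_http3_versions(alt_svc: str) -> list[str]:
    """Return the HTTP/3 protocol IDs (h3, h3-29, ...) listed in an Alt-Svc header value."""
    versions = []
    for entry in alt_svc.split(","):
        # Each entry looks like: h3=":443"; ma=86400
        protocol_id = entry.split(";")[0].split("=")[0].strip()
        if protocol_id == "h3" or protocol_id.startswith("h3-"):
            versions.append(protocol_id)
    return versions


header = 'h3=":443"; ma=86400, h3-29=":443"; ma=86400, h2=":443"'
print(advertised_http3_versions(header))  # ['h3', 'h3-29']
```

An empty result only means HTTP/3 is not advertised in that header; it does not prove that the server cannot speak it.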

